‘I feel lost’ – AI pioneer speaks out as experts warn it could wipe out humanity

One of the “godfathers” of artificial intelligence (AI) has said he feels “lost” as experts warned the technology could lead to the extinction of humanity.

Professor Yoshua Bengio told the BBC that all companies building AI products should be registered and people working on the technology should have ethical training.

It comes after dozens of experts put their name to a letter organised by the Centre for AI Safety, which warned that the technology could wipe out humanity and the risks should be treated with the same urgency as pandemics or nuclear war.

Prof Bengio said: “It is challenging, emotionally speaking, for people who are inside (the AI sector).

“You could say I feel lost. But you have to keep going and you have to engage, discuss, encourage others to think with you.”

Senior bosses at companies such as Google DeepMind and Anthropic signed the letter along with another pioneer of AI, Geoffrey Hinton, who resigned from his job at Google earlier this month, saying that in the wrong hands, AI could be used to harm people and spell the end of humanity.

Experts had already been warning that the technology could take jobs from humans, but the new statement warns of a deeper concern, saying AI could be used to develop new chemical weapons and enhance aerial combat.

AI apps such as Midjourney and ChatGPT have gone viral on social media sites, with users posting fake images of celebrities and politicians, and students using ChatGPT and other “large language models” to generate university-grade essays.

But AI can also perform life-saving tasks, such as algorithms analysing medical images like X-rays, scans and ultrasounds, helping doctors to identify and diagnose diseases such as cancer and heart conditions more accurately and quickly.
Last week Prime Minister Rishi Sunak spoke about the importance of ensuring the right “guard rails” are in place to protect against potential dangers, ranging from disinformation and national security to “existential threats”, while also driving innovation.

He retweeted the Centre for AI Safety’s statement on Wednesday, adding: “The government is looking very carefully at this. Last week I stressed to AI companies the importance of putting guardrails in place so development is safe and secure. But we need to work together. That’s why I raised it at the @G7 and will do so again when I visit the US.”

Prof Bengio told the BBC all companies building powerful AI products should be registered.

“Governments need to track what they’re doing, they need to be able to audit them, and that’s just the minimum thing we do for any other sector like building aeroplanes or cars or pharmaceuticals,” he said.

“We also need the people who are close to these systems to have a kind of certification… we need ethical training here. Computer scientists don’t usually get that, by the way.”

Prof Bengio said of AI’s current state: “It’s never too late to improve.

“It’s exactly like climate change. We’ve put a lot of carbon in the atmosphere. And it would be better if we hadn’t, but let’s see what we can do now.”

Oxford University expert Sir Nigel Shadbolt, chairman of the London-based Open Data Institute, told the BBC: “We have a huge amount of AI around us right now, which has become almost ubiquitous and unremarked. There’s software on our phones that recognise our voices, the ability to recognise faces.

“Actually, if we think about it, we recognise there are ethical dilemmas in just the use of those technologies.
I think what’s different now though, with the so-called generative AI, things like ChatGPT, is that this is a system which can be specialised from the general to many, many particular tasks and the engineering is in some sense ahead of the science.

“We don’t quite know how to understand the absolute consequences of this technology, we all have in common a recognition that we need to innovate responsibly, that we need to think about the ethical dimension, the values that these systems embody.

“We have to understand that AI is a huge force for good. We have to appreciate, not the very worst, (but) there are lots of existential challenges we face… our technologies are on a par with other things that might cut us short, whether it’s climate or other challenges we face.

“But it seems to me that if we do the thinking now, in advance, if we do take the steps that people like Yoshua is arguing for, that’s a good first step, it’s very good that we’ve got the field coming together to understand that this is a powerful technology that has a dark and a light side, it has a yin and a yang, and we need lots of voices in that debate.”

2023-05-31 19:29

Ryder Named Among Food Logistics’ Top 3PL & Cold Storage Providers to Food & Beverage Industry for 11th Consecutive Year

MIAMI--(BUSINESS WIRE)--May 31, 2023--

2023-05-31 19:24

Does xQc co-own 'GTA' roleplay server NoPixel? Who are his other partners?

xQc announced that he was co-owner of the well-known 'GTA' roleplay server NoPixel

2023-05-31 19:24

India to launch electronics repair pilot project

BENGALURU India is launching a pilot project aimed at stimulating its electronics repair outsourcing industry by relaxing some

2023-05-31 19:23

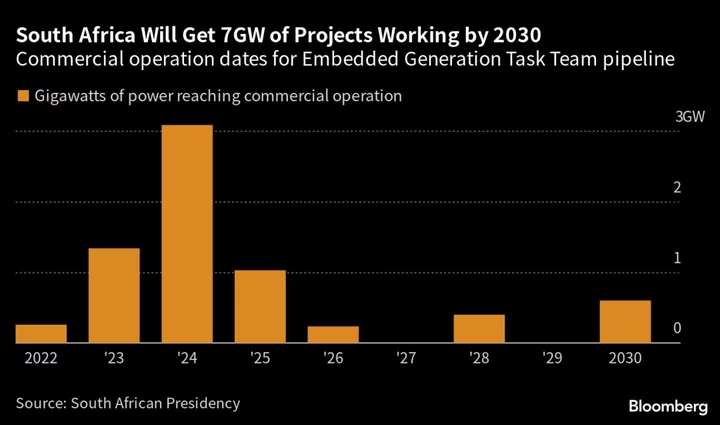

South Africa Says Private Companies to Add Four Gigawatt to Grid by End 2024

South Africa expects private companies to add more than 4 gigawatts of electricity generation capacity to the grid

2023-05-31 19:22

Advanced Test Equipment Corporation Becomes Authorized Rental Partner for Anritsu

SAN DIEGO--(BUSINESS WIRE)--May 31, 2023--

2023-05-31 19:16

EU tech chief calls for voluntary AI code of conduct within months

By Philip Blenkinsop LULEA, Sweden The United States and European Union should push the artificial intelligence (AI) industry

2023-05-31 19:15

Thea Booysen: 5 unknown facts about fan-favorite YouTuber MrBeast's girlfriend

MrBeast and Thea Booysen reportedly started dating in February 2022, after the former broke up with Maddy Spidell

2023-05-31 18:59

Voices: The real reason companies are warning that AI is as bad as nuclear war

They are 22 words that could terrify those who read them, as brutal in their simplicity as they are general in their meaning: “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

That is the statement from San Francisco-based non-profit the Center for AI Safety, and signed by chief executives from Google DeepMind and ChatGPT creators OpenAI, along with other major figures in artificial intelligence research.

The fact that the statement has been signed by so many leading AI researchers and companies means that it should be heeded. But it also means that it should be robustly examined: why are they saying this, and why now? The answer might take some of the terror away (though not all of it).

Writing a statement like this functions as something like a reverse marketing campaign: our products are so powerful and so new, it says, that they could wipe out the world. Most tech products just promise to change our lives; these ones could end them. And so what looks like a statement about danger is also one that highlights just how much Google, OpenAI and more think they have to offer.

Warning that AI could be as terrible as pandemics also has the peculiar effect of making artificial intelligence's dangers seem as if they just arise naturally in the world, like the mutation of a virus. But every dangerous AI is the product of intentional choices by its developers – and in most cases, by the companies that have signed the new statement.

Who is the statement for? Who are these companies talking to? After all, they are the ones who are creating the products that might extinguish life on Earth. It reads a little like being hectored by a burglar about your house’s locks not being good enough.

None of this is to say that the warning is untrue, or shouldn't be heeded; the danger is very real indeed.
But it does mean that we should ask a few more questions of those warning us about it, especially when they are conveniently the companies that created this ostensibly apocalyptic tech in the first place.

AI doesn't feel so world-destroying yet. The statement's doomy words might come as some surprise to those who have used the more accessible AI systems, such as ChatGPT. Conversations with that chatbot and others can be funny, surprising, delightful and sometimes scary – but it's hard to see how what is mostly prattle and babble from a smart but stupid chatbot could destroy the world.

They also might come as a surprise to those who have read about the many, very important ways that AI is already being used to help save us, not kill us. Only last week, scientists announced that they had used artificial intelligence to find new antibiotics that could kill off superbugs, and that is just the beginning.

By focusing on the "risk of extinction" and the "societal-scale risk" posed by AI, however, its proponents are able to shift the focus away from both the weaknesses of actually existing AI and the ethical questions that surround it. The intensity of the statement, the reference to nuclear war and pandemics, make it feel like we are at a point equivalent to cowering in our bomb shelters or in lockdown. They say there are no atheists in foxholes; we might also say there are no ethicists in fallout shelters.

If AI is akin to nuclear war, though, we are closer to the formation of the Manhattan Project than we are to the Cold War. We don’t need to be hunkering down as if the danger is here and there is nothing we can do about it but “mitigate it”. There's still time to decide what this technology looks like, how powerful it is and who will be at the sharp end of that power.
Statements like this are a reflection of the fact that the systems we have today are a long way from those that we might have tomorrow: the work going on at the companies that warned us about these issues is vast, and could be much more transformative than chatting with a robot. It is all happening in secret, and shrouded in both mystery and marketing buzz, but what we can discern is that we might only be a few years away from systems that are both more powerful and more sinister.

Already, the world is struggling to differentiate between fake images and real ones; soon, developments in AI could make it very difficult to tell the difference between fake people and real ones. At least according to some in the industry, AI is set to develop at such a pace that it might only be a few years before those warnings are less abstractly worrying and more concretely terrifying.

The statement is correct in identifying those risks, and urging work to avoid them. But it is more than a little helpful to the companies that signed it in making those risks seem inevitable and naturally occurring, as if they are not choosing to build and profit from the technology they are so worried about. It is those companies, not artificial intelligence, that have the power to decide what that future looks like – and whether it will include our "extinction".

2023-05-31 18:58

TikTok: Divorce lawyer warns women to 'stay away' from 'controlling' men with these 5 'narcissistic' jobs

The divorce lawyer gave her assessment based on seeing the recurring patterns in her 13 years of experience

2023-05-31 18:54

Karpowership Appeals Saldanha Application Extension Refusal

Karpowership, the Turkish company seeking to install ship-mounted power plants in South African ports, is appealing a decision

2023-05-31 18:49

Why is MrBeast not happy with Elon Musk? Twitter fiasco involving AOC explored

YouTuber MrBeast is evidently dissatisfied with how Twitter boss Elon Musk handled the drama involving a fake account in the name of AOC

2023-05-31 18:28