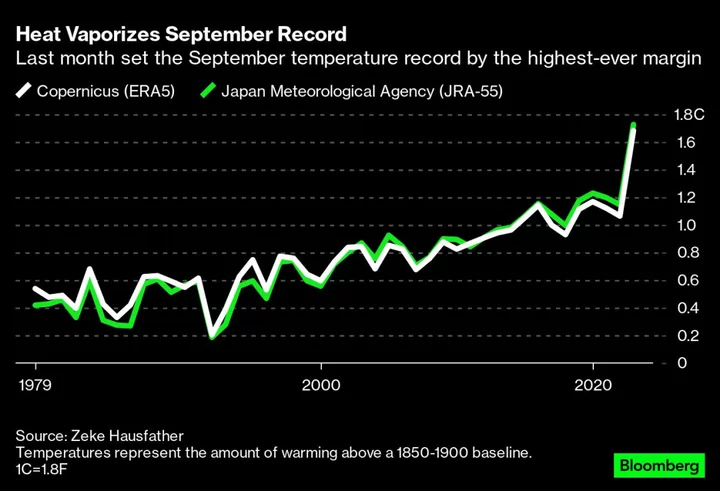

In Pictures: 2023 Extreme Heat Unleashed Rain Like Never Before

What’s likely to be the hottest year on record brought never-before-seen rain to five continents, killing thousands of

2023-11-01 15:45

New Orleans facial recognition tool mostly used against Black suspects

After the New Orleans City Council voted to allow the use of facial recognition software to identify criminals more readily and accurately, records indicate that the technology has been largely ineffective and error-prone. The system went into effect in the summer of 2022, and Politico obtained records covering a year of its results. The outlet found that the facial recognition tool not only largely failed to identify suspects but was also used disproportionately on Black people: from October 2022 to August 2023, almost every facial recognition request involved a Black suspect. Politico reported that the department made 19 requests in total. Two were thrown out because police had identified the suspect before the system’s results came back, and two others were rejected because the program could not be applied to those crimes. Of the remaining 15 requests made by the New Orleans Police Department, 14 concerned Black suspects, the outlet wrote. Only six of those 15 requests returned matches, and half of those matches were wrong; the other nine returned no match at all. Facial recognition technology has long been controversial. New Orleans had previously banned the use of facial recognition software, a ban that took effect in 2020 following the death of George Floyd. In 2022, the city reversed course and allowed the technology to be used. In the wake of the reversal, ACLU of Louisiana Advocacy Director Chris Kaiser called the new ordinance “deeply flawed.” He pointed to research indicating that “racial and gender bias” affected the program’s accuracy and also raised privacy concerns about the data the program relies on to identify potential suspects. A previous investigation by The Independent revealed that at least six people around the US have been falsely arrested on the basis of facial recognition technology; all of them are Black.
One such arrest occurred in Louisiana, where the use of facial recognition technology led to the wrongful arrest of a Georgia man for a string of purse thefts. Despite such false arrests, at least half of federal agencies that employ law enforcement officers, and a quarter of state and local agencies, are using the technology. At least one council member has acknowledged its shortcomings. “This department hung their hat on this,” New Orleans Councilmember At-Large JP Morrell told Politico. Mr Morrell voted against allowing the use of facial recognition last year. After seeing the police department’s data on its usage, he said the tool is “wholly ineffective and pretty obviously racist.” “The data has pretty much proven that advocates were mostly correct,” Mr Morrell continued. “It’s primarily targeted towards African Americans and it doesn’t actually lead to many, if any, arrests.” City councillor Eugene Green, who introduced the measure to lift the ban, holds a different view. He told Politico that he still supports the department’s use of facial recognition. “If we have it for 10 years and it only solves one crime, but there’s no abuse, then that’s a victory for the citizens of New Orleans.” Despite the problems with the system’s results, the department’s use of the tool has not led to any known false arrests. “We needed to have significant accountability on this controversial technology,” council member Helena Moreno, who co-authored the initial ban, told the outlet. Under New Orleans rules, the police department is required to report to the City Council every month on how the tool was used, although Politico reported that the department and the council agreed it could share the data quarterly instead.
When asked about the potential flaws in the facial recognition tool outlined in Politico’s reporting, a New Orleans Police Department spokesperson told The Independent that “race and ethnicity are not a determining factor for which images and crimes are suitable for Facial Recognition review. However, a description of the perpetrator, including race, is a logical part of any search for a suspect and is always a criterion in any investigation.” The spokesperson also emphasised that investigators do not rely solely on facial recognition, describing it as “one of multiple tools that can be used to aid in investigations,” alongside other evidence and forensics. The spokesperson added that officers are trained to conduct “bias-free investigations.” “The lack of arrests in which Facial Recognition Technology was used as a tool, is evidence that NOPD investigators are being thorough in their investigations,” the statement concluded.

2023-11-01 06:49

European Nations Join Island States in Calling for Fossil-Fuel Phaseout

An influential alliance including several European countries and island states has thrown its weight behind a commitment to

2023-10-31 21:16

Why Biden is so concerned about AI

President Joe Biden is addressing concerns about artificial intelligence as his administration attempts to guide the development of the rapidly evolving technology. The White House said on Monday (30 October) that a sweeping executive order will address concerns about safety and security, privacy, equity and civil rights, the rights of consumers, patients, and students, and support for workers. The order will also assign federal agencies a list of tasks to oversee the development of the technology.
‘We have to move as fast, if not faster than the technology itself’
“We can’t move at a normal government pace,” White House Chief of Staff Jeff Zients quoted Mr Biden as telling his staff, according to the AP. “We have to move as fast, if not faster than the technology itself.” Mr Biden believes the US government was slow to take the risks of social media into account, contributing to the mental health problems now seen among US youth. AI could dramatically advance cancer research, help forecast the impacts of the climate crisis, and improve the economy and public services. However, it could also be used to spread fake images, audio and video, with potentially widespread political consequences. Other potential harms include worsening racial and social inequality and the use of AI to commit crimes such as fraud. Alexandra Reeve Givens, president of the Center for Democracy & Technology, told the AP that the Biden administration is using the tools at its disposal to issue “guidance and standards to shape private sector behaviour and leading by example in the federal government’s own use of AI”. Mr Biden’s executive order comes after technology companies made voluntary commitments, and the administration hopes that congressional legislation and international action will follow. Earlier this year, the White House secured commitments from Google, Meta, Microsoft, and OpenAI to put safety standards in place when building new AI tools and models.
Monday’s executive order invokes the Defense Production Act to require AI developers to share safety test results and other data with the government. The National Institute of Standards and Technology is also set to establish standards governing the development and use of AI. The Department of Commerce will publish guidance on labelling and watermarking AI-generated content. An administration official told the press on Sunday that the order is intended to be implemented over a period of 90 days to a year, with safety and security measures facing the tightest deadlines. Last Thursday, Mr Biden met with staff to put the finishing touches on the order. The meeting was scheduled to last half an hour but stretched to an hour and 10 minutes.
Biden ‘impressed and alarmed’ by AI
In the months before Monday’s signing, the president held a series of meetings about the technology. He met twice with the Science Advisory Council to discuss AI and raised it during two cabinet meetings. At several gatherings, Mr Biden also pressed tech industry leaders and advocates on what the technology is capable of. Deputy White House Chief of Staff Bruce Reed told the AP that Mr Biden “was as impressed and alarmed as anyone”. “He saw fake AI images of himself, of his dog,” he added. “He saw how it can make bad poetry. And he’s seen and heard the incredible and terrifying technology of voice cloning, which can take three seconds of your voice and turn it into an entire fake conversation.” The AI-created images and audio prompted Mr Biden to push for the labelling of AI-created content. He was also concerned that older people could be scammed by phone calls from AI tools using fake voices that sound like a family member or other loved one. Meetings on AI often ran long, with the president once telling advocates: “This is important. Take as long as you need.” Mr Biden also spoke to scientists about the technology’s possible benefits. These include helping to explain the origins of the universe and modelling extreme weather events such as floods, for which historical data has become unreliable because of changes caused by the climate crisis.
‘When the hell did I say that?’
On Monday at the White House, Mr Biden addressed concerns about “deepfakes” during a speech marking the signing of the order. “With AI, fraudsters can take a three-second recording of your voice, I have watched one of me on a couple of occasions. I said, ‘When the hell did I say that?’” Mr Biden said, to laughter from the audience. Mr Reed added that he watched Mission: Impossible – Dead Reckoning Part One with Mr Biden one weekend at Camp David. At the beginning of the film, the antagonist, an AI called “the Entity”, sinks a submarine, killing its crew. “If he hadn’t already been concerned about what could go wrong with AI before that movie, he saw plenty more to worry about,” Mr Reed told the news agency. The White House has faced pressure from a number of allied groups to address the possible harmful effects of AI. ReNika Moore, director of the racial justice programme at the American Civil Liberties Union, told the AP that the organisation met with the administration to make sure “we’re holding the tech industry and tech billionaires accountable” so that the new tools will “work for all of us and not just a few”. Former Biden administration official Suresh Venkatasubramanian told the news agency that law enforcement’s use of AI, such as at border checkpoints, is one of the top challenges. “These are all places where we know that the use of automation is very problematic, with facial recognition, drone technology,” the computer scientist said.

2023-10-31 04:27

Karpowership Wins Environment Permit for South African Power Plant

Karpowership won environmental authorization for one of three ship-mounted power plants it wants to connect to the South

2023-10-27 13:57

Emerging World Needs $1.5 Trillion for Green Buildings, IFC Says

The International Finance Corporation is looking to develop a guarantee facility for private investors to boost finance for

2023-10-25 21:59

Students told to ‘avoid all robots’ after Oregon State University bomb threat prank

Students at Oregon State University were warned to “avoid all robots” following a bomb threat prank involving automated food delivery machines on campus. The threat was made by a student via social media on Tuesday, prompting university staff to issue the urgent warning. “Bomb Threat in Starship food delivery robots. Do not open robots. Avoid all robots until further notice. Public Safety is responding,” the university wrote on X, formerly known as Twitter. The university later posted several updates, saying that the robots had been isolated in a “safe location” before being inspected by a technician. Students were advised to “remain vigilant for suspicious activity”. The emergency was declared over just before 2pm local time, with “normal activities” resuming. “All robots have been inspected and cleared. They will be back in service by 4pm today,” the university later wrote online. Starship, the company that designs the robots, said that despite the student’s subsequent admission that the bomb threat had been “a joke”, it had suspended the service while investigations were ongoing. In its own statement, the company wrote: “A student at Oregon State University sent a bomb threat, via social media, that involved Starship’s robots on the campus. While the student has subsequently stated this is a joke and a prank, Starship suspended the service. Safety is of the utmost importance to Starship and we are cooperating with law enforcement and the university during this investigation.”

2023-10-25 09:25

California suspends Cruise driverless taxi test after accident

Autonomous vehicle company Cruise must suspend its driverless taxi operations in California immediately, state motor vehicle regulators announced on Tuesday. “The California DMV today notified Cruise that the department is suspending Cruise’s autonomous vehicle deployment and driverless testing permits, effective immediately,” the state Department of Motor Vehicles said in a statement. “The DMV has provided Cruise with the steps needed to apply to reinstate its suspended permits, which the DMV will not approve until the company has fulfilled the requirements to the department’s satisfaction.” The regulator said it has the right to revoke permits when “there is an unreasonable risk to public safety.” The suspension applies only to Cruise trips with no human safety driver on board. It follows an incident earlier this month in which a woman in San Francisco was struck by a human driver in a hit-and-run that propelled her into the path of a Cruise robotaxi. “Ultimately, we develop and deploy autonomous vehicles in an effort to save lives,” Cruise said in a statement to ABC7. “In the incident being reviewed by the DMV, a human hit and run driver tragically struck and propelled the pedestrian into the path of the AV. The AV braked aggressively before impact and because it detected a collision, it attempted to pull over to avoid further safety issues. When the AV tried to pull over, it continued before coming to a final stop, pulling the pedestrian forward.” “Our thoughts continue to be with the victim as we hope for a rapid and complete recovery,” the company added. The suspension is a major blow to Cruise, which is owned by General Motors. Alongside Waymo, a subsidiary of Google parent company Alphabet, Cruise saw California, and San Francisco in particular, as a key testing ground for driverless taxi technology.
In August, both companies received permission from state regulators to operate paid taxi services around the clock without a safety driver in San Francisco. This came despite vigorous debate in the city over whether the AVs were safe enough to operate. The rollout of robotaxis in San Francisco has been marred by problems. Driverless cars, Cruise taxis in particular, have been accused of causing traffic congestion and impeding first responders. Cruise shared data with the state in August covering January to mid-July of 2023. According to that data, Cruise AVs temporarily malfunctioned or shut down and required recovery 177 times, 26 of them with a passenger inside. Waymo recorded 58 such events over a similar period. According to the San Francisco Municipal Transportation Agency (SFMTA), Cruise and Waymo vehicles were involved in more than 300 incidents of irregular driving, including unexpected stops and collisions, between April 2022 and April 2023. The San Francisco Fire Department says AVs interfered with its work 55 times in 2023. Last year, according to internal documents viewed by WIRED, Cruise lost contact with its entire fleet for 20 minutes. That same year, an anonymous employee warned California regulators that the company loses touch with its vehicles “with regularity.” Since being rolled out in San Francisco, robotaxis have killed a dog, caused a mile-long traffic jam during rush hour, blocked a traffic lane as officials responded to a shooting, and driven over fire hoses. Jeffrey Tumlin, San Francisco’s director of transportation, has called the rollout of robotaxis a “race to the bottom.” He argued that Cruise and Waymo were not yet definitive transit solutions and had instead only “met the requirements for a learner’s permit.” Others have argued that the introduction of driverless cars in San Francisco and beyond will further displace workers already pushed out of the taxi industry by companies like Uber and Lyft.

2023-10-25 03:52

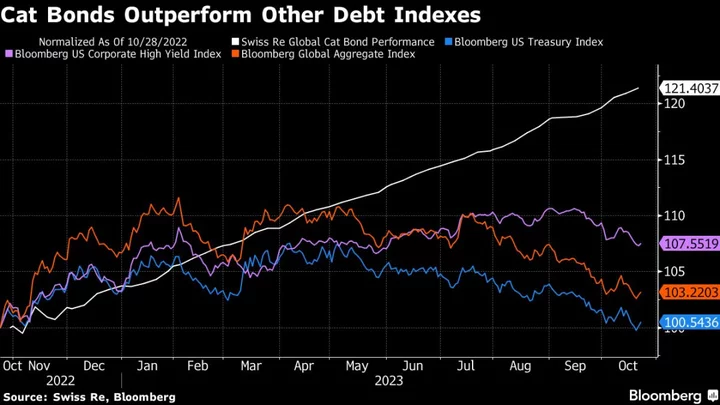

“Catastrophe” Bond Market Headed for Major Surge in Issuance

The market for catastrophe bonds, one of this year’s best-performing debt classes, is about to see a significant

2023-10-24 20:47

Keeping Old Eskom Plants Post-2030 May Kill 15,000, Study Shows

Suspending a plan to retire 11,300 megawatts of South African coal-fired power generation to ease blackouts could lead

2023-10-24 20:23

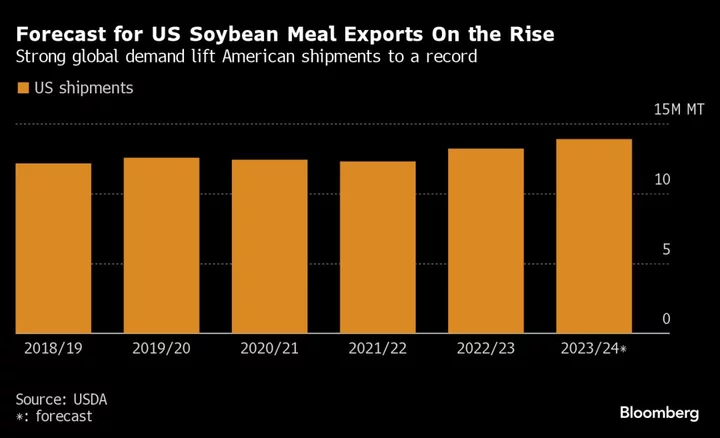

Biofuel Boom to Push US Soy Meal Exports to Record High

Shipments of US soybean meal to other countries are expected to climb to a record high next year

2023-10-24 04:51

Asset Managers Are Updating Bond Models to Capture a New Risk

A growing number of asset managers is reassessing bond values tied to real assets, as a spike in

2023-10-22 21:22