Meta faces renewed criticism over end-to-end encryption amid child safety fears

Child protection experts have fiercely criticised social media giant Meta over its plans for end-to-end encryption, accusing the tech firm of prioritising profit over children’s safety. Simon Bailey, a former police chief constable who was national lead for child protection at the National Police Chiefs’ Council, accused Meta of a “complete loss of social and moral responsibility” over the plans. John Carr, secretary of a coalition of UK children’s charities concerned with internet safety, called the move “utterly unconscionable”. Their comments came after the head of the National Crime Agency, Graeme Biggar, said introducing end-to-end encryption on Facebook would be like “consciously turning a blind eye to child abuse”. Speaking at a lecture in Westminster earlier this month, the law enforcement chief said it should be up to the government rather than technology companies to draw the line between privacy and child safety. Meta responded by saying it has robust measures in place to combat abuse and that it expects to make more reports to law enforcement after end-to-end encryption is brought in. Mr Bailey said that as he had seen the scale of online sexual abuse grow, he had also seen “big tech companies, like Meta, absolve themselves of any responsibility when it comes to tackling online child sexual abuse”. The former chief constable said: “Big tech facilitates and, through their algorithms, encourages this abuse to take place. “In response to what they know and can see as a global pandemic of online child sexual abuse, they have consciously decided to take the easy way out of dealing with the problem. “Meta, one of the largest carriers of this abuse, has decided to implement end-to-end encryption by default, and effectively stop law enforcement’s ability to identify and arrest offenders and, ultimately, to protect children. 
“They are using the guise of privacy to justify their position and, in doing so, are continuing to put profit before child protection. It is time their complete loss of social and moral responsibility is highlighted and challenged.” Mr Carr, who is secretary of the UK Children’s Charities’ Coalition on Internet Safety, said: “If introduced without the appropriate safeguards that will allow law enforcement to detect and prevent online child sexual abuse, end-to-end encryption threatens to deny justice to huge numbers of children. “Children are major users of social media. A great many use Meta’s platforms, including Facebook Messenger and Instagram Direct. “The design and nature of these platforms make them a perfect space for dangerous people to discover, befriend, groom and sexually abuse children – and if end-to-end encryption is introduced without appropriate safeguards, Meta will be willingly blinding itself to the abuse taking place online. “Their plans are utterly unconscionable – particularly when there are tech solutions out there that enable end-to-end encryption to exist and child sexual abuse to be detected, reported, and justice to be served. “Big tech companies, like Meta, must think again before introducing a blanket roll-out of end-to-end encryption across their platforms. “If they don’t, thousands of children will be at risk, and we will fail to solve the growing problem of online child sexual abuse. Do better, Meta – it’s time to prioritise child safety over profit.” Rhiannon-Faye McDonald, head of advocacy at the Marie Collins Foundation, was herself sexually assaulted at the age of 13 after a predator contacted her online. She said: “To say I am disappointed that Meta is continuing with their plans to roll out end-to-end encryption is an understatement. 
The measures they say will be in place – using metadata to identify patterns of behaviour rather than content – are not good enough. “This move gives child sex abusers the ability to act undetected on its platforms, as Meta will also lose the ability to use technology to detect and remove child sexual abuse images and videos. “As a victim of child sexual abuse myself, where my abuse was documented and shared online by the perpetrator, I cannot emphasise enough the impact this has on me and other victims of this abuse. “I am horrified that the images of my abuse could be infinitely reshared across the globe with no hope of them being blocked or taken down. How is this protecting my privacy?” She said it is “incredibly worrying” that big tech companies “can unilaterally make decisions that limit our ability to protect children”. A Meta spokesperson said: “The overwhelming majority of Brits already rely on apps that use encryption to keep them safe from hackers, fraudsters and criminals. “We don’t think people want us reading their private messages so have spent the last five years developing robust safety measures to prevent, detect and combat abuse while maintaining online security. “We recently published an updated report setting out these measures, such as restricting people over 19 from messaging teens who don’t follow them and using technology to identify and take action against malicious behaviour. 
“As we roll out end-to-end encryption, we expect to continue providing more reports to law enforcement than our peers due to our industry-leading work on keeping people safe.”

2023-11-13 08:26

China's Singles Day festival wraps up with e-commerce giants reporting sales growth

By Casey Hall SHANGHAI (Reuters) - China's largest e-commerce player Alibaba Group said it recorded year-on-year growth over this year's Singles

2023-11-12 21:45

Police investigate 'cyber incident' at Australia ports operator

SYDNEY The Australian Federal Police said on Sunday they were investigating a cybersecurity incident that forced ports operator

2023-11-12 07:45

Why Won’t My Cat Use the Litter Box?

A certified cat trainer suggests reasons why a cat won’t use its litter box—and offers some possible solutions.

2023-11-12 04:24

Meta and Snap must detail child protection measures by Dec. 1, EU says

BRUSSELS Facebook owner Meta Platforms and social media company Snap have been given a Dec. 1 deadline by

2023-11-11 05:55

Exclusive-ICBC hack led to unit temporarily owing BNY $9 billion - sources

NEW YORK Industrial and Commercial Bank of China's hack left its U.S. unit temporarily owing Bank of New

2023-11-11 05:50

Exclusive-ICBC injected capital into U.S. unit after hack - sources

NEW YORK Industrial and Commercial Bank of China (ICBC) injected significant capital into its U.S. unit to help

2023-11-11 04:48

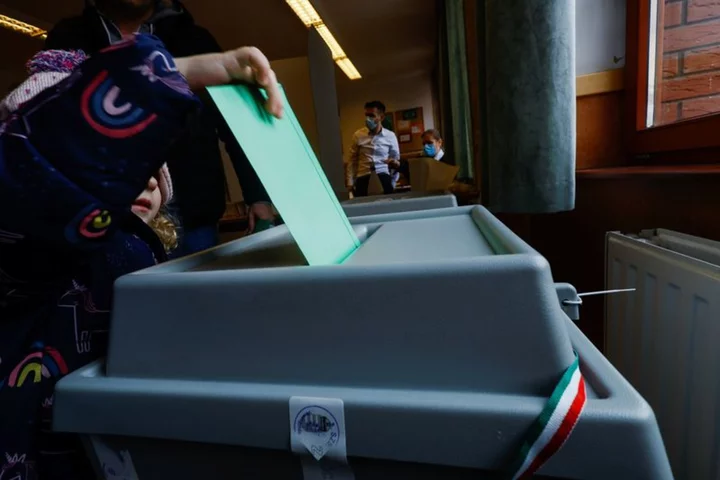

LinkedIn says spy firm targeted Hungarian activists, journalists before 2022 election

By Raphael Satter ARLINGTON, Virginia Private spy firm Black Cube was behind a hidden video campaign that used

2023-11-11 03:28

Humane AI Pin: Much-hyped tech product launches and makes major mistake in its first outing

Humane has launched its AI Pin, one of the world’s most hyped tech products, and it has immediately made a public mistake. The AI Pin has been the subject of speculation promoted by Humane, a somewhat mysterious company whose designers and executives include people who have worked at Apple and Microsoft. The device is designed to be attached to clothing and uses a range of microphones, speakers and a display that can shine onto its owner’s hand to give information. That information is provided by artificial intelligence systems built on technology from ChatGPT creator OpenAI and Microsoft. The pin costs $699 and will be available later this year. Its president, Imran Chaudhri, has promoted it as a response both to the prevalence of phones and to the future of mixed-reality headsets, aiming instead to allow people to engage with the world around them. One of the features intended to do that is access to artificial intelligence systems that can answer questions. Users can simply press the AI Pin and speak into the air, which allows the device to access the internet and provide an answer. During its reveal event, executives showed the pin being used to answer one such question. “I can also use it to ask questions, like: when is the next eclipse, and where is the best place to see it?”, representatives said, explaining that it would be answered by “an AI browsing the web, or grabbing knowledge from all over the internet”. The AI Pin is then shown answering that the best place to view the next total solar eclipse, in April 2024, would be Exmouth in Australia or East Timor. But next year’s total solar eclipse will in fact be visible in North America, and has even been named “the Great North American Eclipse”. It will not be visible at all in Australia, and its path of totality passes only through Mexico, the US and Canada. 
The system may have made the mistake because a total solar eclipse earlier this year was in fact best viewed from Exmouth and East Timor. That eclipse, in April, brought widespread coverage to the small Australian town – and that coverage was presumably used to train the artificial intelligence system that answered the question. Humane did not say which assistant was being used for that answer. The AI Pin is built to call on a number of different assistants depending on the question asked. The error recalls a similar one made by Google’s Bard chatbot when it was introduced at the beginning of the year. An ad showed Bard being asked about interesting discoveries by Nasa’s James Webb Space Telescope, and replying that it had taken “the very first pictures of a planet outside of our own solar system” – which is not true. At the time, many noted that the error highlighted a central problem with large language models: the systems tend to “hallucinate”, or confidently state falsehoods, and have no real way of checking whether the information they provide is true.

2023-11-11 02:48

iPhone update lets public try first ever Vision Pro headset feature – sort of

A new iPhone update brings the first look at one of the key features of Apple’s upcoming Vision Pro headset. iOS 17.2, which is available to developers in an early version now and is likely to be released to everyone later this year, brings support for spatial video on the iPhone 15 Pro and Pro Max. That will allow people to record videos with depth that can later be viewed on the augmented reality headset. Spatial photos and videos were a key part of Apple’s introduction of the Vision Pro earlier this year. Recording a spatial video works in much the same way as any other video: users choose spatial video and are told to turn the phone to landscape orientation, as well as being warned about low light or being too close to the subject. They can then record the video as normal. On the iPhone itself, the videos appear like any other. Users can watch them in the Photos app, but there is no preview of the three-dimensional effect, which can only be seen on the headset. Apple announced that spatial video would be available on the new iPhones when they were unveiled in September. Until then, the Vision Pro had been the only announced way of taking the videos, leading to fears and some mockery that people would have to wear the augmented reality headset during important moments they wanted to capture. The new features are in the second beta of iOS 17.2 to be released to developers, and the update is likely to come to the public in December. iOS 17.2 also brings the Journal app, which was first announced at Apple’s Worldwide Developers Conference in June but did not arrive in the initial release of iOS 17. It also adds new widgets, Apple Music features including collaborative playlists, more Memoji options and improvements to the security of iMessage. The iPhone 15 Pro also gets another exclusive feature in that update. 
When it arrives, it will add a new option for the action button on the side, allowing it to open the Translate app, in addition to the existing options that include the camera and torch.

2023-11-11 02:27

US and EU Lead Push for COP28 to Back Tripling of Renewables

The US and the European Union are leading a global push for the United Nations’ climate talks to

2023-11-11 02:20

ChatGPT creator mocks Elon Musk’s new AI for ‘dad jokes’ and ‘cringey boomer humour’

Sam Altman, the head of ChatGPT creator OpenAI, has mocked Elon Musk’s entry into the artificial intelligence market. This week, Mr Musk’s xAI company unveiled Grok, another chat-based AI system along the lines of ChatGPT. He claimed that the system was designed to be irreverent and funny, and to avoid what he suggested was censorship on other platforms such as ChatGPT. But the head of that rival hit back at Grok in a tweet suggesting that Grok “tell[s] jokes like your dad’s dad” and trades in “cringey boomer humour”. The system works in a “sort of awkward shock-to-get-laughs sort of way”, he said. Mr Altman’s post showed him building a system of his own using a new OpenAI feature, along with a screen grab of the instructions he had given to it. He joked that “GPTs can save a lot of effort”, in reference to the new feature, named GPTs, which allows people to create their own versions of the chatbot with specific, custom characteristics. Mr Musk responded with what appeared to be a quote from his own Grok AI, which joked that “humour is clearly banned at OpenAI”. The marketing of Mr Musk’s Grok has revolved primarily around the fact that it will answer questions that other systems refuse, and that its tone is more irreverent than rival systems such as ChatGPT and Google’s Bard. When it was launched, for instance, he gave an example of how it will answer “almost anything”, sharing a screenshot of it being asked how to make cocaine. “Grok is designed to answer questions with a bit of wit and has a rebellious streak,” a blog post announcing its launch noted. “Please don’t use it if you hate humour!” Grok also differs from those systems in having real-time access to posts and data from Twitter. Other AI firms were using that site to train their models, but Mr Musk has sought to cut them off, arguing that the data scraping was placing too much demand on the site. 

2023-11-11 01:56