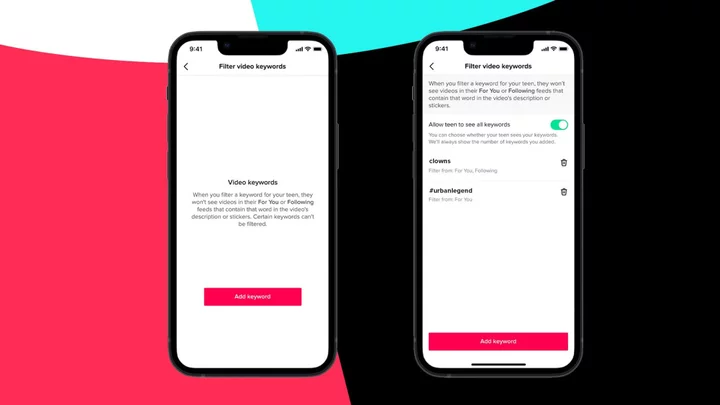

Caregivers can push back against the unpredictable TikTok algorithm even more with expanded content filtering tools, launched today by the social media giant.

Individuals who oversee accounts of users under the age of 18 are now able to limit the kinds of videos that appear on a user's feed using selected keywords via TikTok's Family Pairing page, which lets caregivers and teens customize safety settings and app use.

The tool is an expansion of TikTok's content filtering settings launched in July 2022, which let users manage what they see on their For You Page using keywords and hashtags, based on guidance from the Family Online Safety Institute. Importantly, the keywords selected as inappropriate by caregivers are also visible to teen users, fostering a collaborative dynamic that encourages ongoing communication on social media safety.

The platform also announced that it would officially convene its anticipated global Youth Council, a forum for teens to share their experiences, recommendations, and feedback for the app, later this year.

"Listening to the experience of teens is one of the most important steps we can take to build a safe platform for teens and their families. It helps us avoid designing teen safety solutions that may be ineffective or inadequate for the actual community they're meant to protect, and it brings us closer to being a strong partner to caregivers as we can better represent teens' safety and well-being needs," wrote Julie de Bailliencourt, TikTok's Global Head of Product Policy, in the platform's announcement.

In March, TikTok announced an automatic 60-minute daily screen time limit for all users under 18, with additional caregiver controls for users under 13. In May, it announced a new $2-million advertising fund for mental health and well-being outreach.

TikTok's efforts join a larger debate on the impact of social media use on childhood well-being. In May, the American Psychological Association (APA) released new guidelines for social media use intended to prevent harm, address adolescent mental health, and foster social media literacy. The recommendations included reasonably monitoring pre-teen and teen social media use and minimizing the use of social media sites as online spaces for comparison.

The company is one of several social media platforms that have come under fire in recent years for content and usage moderation practices that, advocates say, could be exacerbating a worsening mental health crisis and placing the burden of digital safety on the shoulders of caregivers.

Meta was sued in 2022 for allegedly exploiting young users for profit after being informed of its platforms' negative effects on users, following a 2021 congressional inquiry into Instagram's impact on teen mental health. A Maryland school district reinvigorated legal attempts earlier this month, suing the parent companies of Instagram, Facebook, TikTok, Snapchat, and YouTube for allegedly "intentionally cultivating" features that have contributed to a mental health crisis among young Americans.

In response to such concerns, TikTok, Meta, and other companies have launched a growing lineup of additional parental control measures, including new Meta features announced this week that allow caregivers to monitor their teens' direct messages.

"We're proud that through Family Pairing, we support more than 850,000 teens and their families in setting guardrails based on their individual needs," TikTok's announcement said. "Our work to help create a safe place for teens and families has no finish line."
