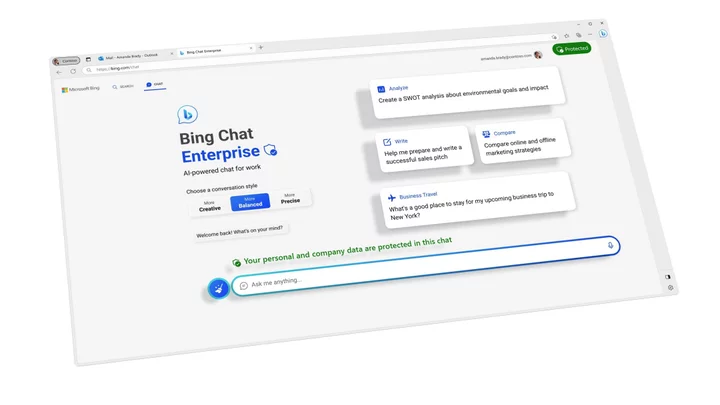

On Wednesday, Microsoft announced a business-friendly version of Bing Chat, designed to let you use the AI chatbot for work without risking a security breach.

Chatbots like Bing, ChatGPT, and Bard are powerful productivity tools for workers. They can summarize vast amounts of text, generate code, and help brainstorm new ideas. But using AI chatbots for work comes with major privacy risks, since the companies behind the large language models that power these tools may use your conversations to improve the models. Chat histories are also saved on the companies' servers.

These risks became painfully clear when Samsung workers inadvertently revealed trade secrets by using ChatGPT to debug code and summarize notes from private meetings. For this reason, many companies, including financial institutions, Apple, and even Google, have banned or warned against the use of ChatGPT for work. In April, OpenAI rolled out the ability to opt out of having your chat history used to train its models, in response to these privacy concerns.

Microsoft touts Bing Chat Enterprise as having built-in security features designed to prevent another Samsung debacle. According to Microsoft's announcement, chat data within Bing Chat Enterprise is not saved, is not used to train its models, and Microsoft has "no eyes-on access."

Microsoft also used the announcement to reveal pricing for Copilot, its AI-powered tool that's integrated across Word, Excel, PowerPoint, Outlook, and Teams.

For commercial customers, Copilot costs $30 a month per user. That's on top of the existing Microsoft 365 subscription, so it doesn't come cheap. But, as the announcement emphasized, Copilot can summarize meetings, create presentations, help tackle your inbox, and more. If time is money (and your company has money), it might be a worthy trade-off.