Hyosung Innovue Announces New Cajera Pivot Recycling ATM Series

IRVING, Texas--(BUSINESS WIRE)--Jul 25, 2023--

2023-07-25 23:24

SME Education Foundation Scholarship Award Amounts Increased to Break Down Financial Barriers to Manufacturing Careers

SOUTHFIELD, Mich.--(BUSINESS WIRE)--Jun 9, 2023--

2023-06-09 21:16

Is Makarov Returning in Modern Warfare 3?

Yes, Vladimir Makarov is returning in Modern Warfare 3 according to a teaser trailer dropped by Activision. The villain is set to team up with Graves in MW3.

2023-08-09 03:18

Our Top 100 Budget Buys: Affordable, Tested Tech That's Actually Worth It

The dictionary defines "testy" as easily annoyed or bad-tempered. But maybe it should refer to

2023-06-17 21:54

Video showing how babies' faces form is giving people nightmares

The human body is an extraordinary thing, and now one video is proving just that while simultaneously giving people nightmares. Childbirth is often regarded as one of nature's most incredible events, but have you ever questioned how a baby's face develops in the womb? Neither have we. But thanks to the BBC, people are divided about how "beautiful" the process actually is. The simulation focuses on the philtrum, the area between the bottom of your nose and your upper lip. In an episode of Inside the Human Body: Creation, Michael Mosley points out: "Down the centuries, biologists have wondered why every face has this particular feature. What we now know is it is the place where the puzzle that is the human face finally all comes together." The footage then recreates a baby's facial development in an animation that begins with two holes at the top of the head, which are in fact the baby's nostrils. The features then appear to merge. "We've taken data from scans of a developing embryo so we're able to show you for the very first time how our faces don't just grow, but fit together like a puzzle," Mosley continues. "The three main sections of the puzzle meet in the middle of your top lip, creating the groove that is your philtrum." He adds: "This whole amazing process, the bits coming together to produce a recognisable human face, happens in the womb between two and three months. If it doesn't happen then, it never will." The snippet understandably garnered a mixed response, with one viewer writing: "That was so creepy yet amazing..." Another joked: "Makes me feel better that Brad Pitt and Tom Cruise once looked like space aliens." And a third quipped: "Thank you for the enlightening information and the skin-curdling nightmares."

2023-09-28 18:21

Landmark Google trial opens with sweeping DOJ accusations of illegal monopolization

US prosecutors opened a landmark antitrust trial against Google on Tuesday with sweeping allegations that for years the company intentionally stifled competition challenging its massive search engine, accusing the tech giant of spending billions to operate an illegal monopoly that has harmed every computer and mobile device user in the United States.

2023-09-12 23:23

How to do the Remini AI headshots on TikTok

Another day, another TikTok trend, and this time the platform is obsessing over an AI app that creates LinkedIn headshots. The struggle of finding an appropriate corporate photo is real, but an app called Remini is seemingly helping thousands of TikTokers solve that problem. One viral clip by a TikToker named Grace, which racked up almost 40 million views, brought attention to the app by sharing her 'before' and 'after' images. "Using this trend to get a new LinkedIn headshot," Grace wrote as the on-screen text, before showcasing a string of professional-looking images Remini had created. "Wait that’s such a good idea and you look AMAZING," one person commented, while another added: "The way my jaw dropped." Hundreds more TikTokers were desperate to find out more, which prompted Grace to upload a tutorial to her page. All you need to do is:

1. Download the Remini app, and read all of the terms and conditions before using it.
2. Once it is downloaded, the app will ask you to pay for a subscription, but Grace highlighted that there is a three-day trial to take advantage of.
3. Go to the AI image tab, where it will ask you to upload around 10 selfies.
4. Select a 'model image' to set the scene. To achieve a corporate look, click 'curriculum'.
5. The photos will be ready after a few minutes.

"I love this," one person quipped. "But definitely crying in photographer." Many more struggled to find the 'free trial' option. However, another TikToker chimed in: "$10 a week, but if you think about it… a headshot like this would cost you time and at least $100 for the photographer."

2023-07-19 16:55

SoundHound Joins Forces With ChowNow To Ensure Restaurants Never Miss A Call

SANTA CLARA, Calif.--(BUSINESS WIRE)--Aug 29, 2023--

2023-08-29 21:29

Pan-African Approach Needed to Ensure Food Security: New Economy

A pan-African approach is needed to solve the continent’s food-security dilemma given the high cost of developing agricultural

2023-06-14 23:20

These night vision digital binoculars are on sale for under $100

TL;DR: As of May 14, the Mini Dual Tube Digital Night Vision Binoculars are on

2023-05-14 17:50

The Apple Pencil (2nd Generation) is on sale for under £100 this Prime Day

TL;DR: The Apple Pencil (2nd Gen) is on sale for £99 this Prime Day. This

2023-07-11 13:26

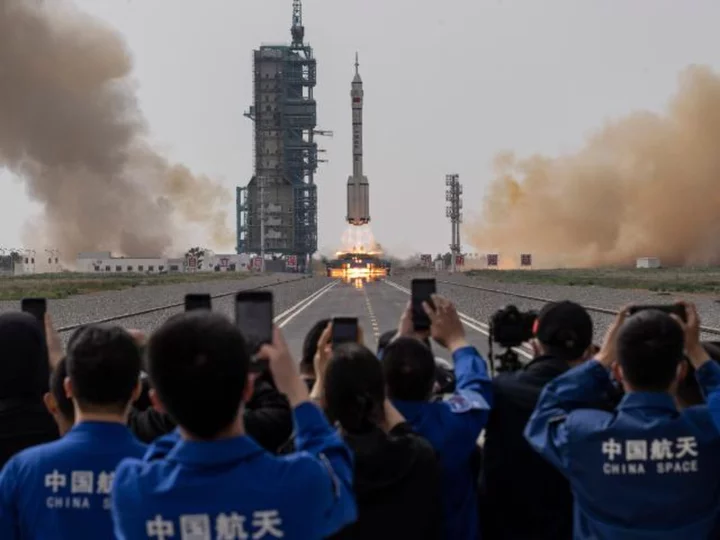

China reveals how it plans to put astronauts on the moon by 2030

Chinese officials on Wednesday unveiled new details about their plans for a manned lunar mission, as China attempts to become only the second nation to put citizens on the moon.

2023-07-13 12:29

You Might Like...

Hackers Contacted Cybersecurity Firm CEO’s Son, Wife in Extortion Attempt

Music firm Universal announces streaming overhaul

Rocket League Rank Distribution: Season 11 Breakdown

Bank of America and Apple Executives Join Chargebacks911 to Drive Rapid Expansion

Justice Department files criminal charges in cases of American tech stolen for Russia, China and Iran

New York deluge triggers flash floods, brings chaos to subways

Save more than $500 on a refurbished iPad Pro

U.S. Army Approves Hypori Halo as Virtual BYOD Enterprise Capability