Pieces of distant, ancient asteroid arrive on Earth from Nasa spacecraft, after travelling billions of miles

A piece of asteroid has arrived on Earth from the other side of the solar system, in a major success for Nasa’s Osiris-Rex mission.

The spacecraft spent years flying to asteroid Bennu, collecting a piece of it and bringing it back home so that it can be studied by researchers. The landing brings to an end a mission that took seven years, saw the spacecraft travel 4 billion miles and cost more than a billion dollars.

Scientists hope that studying the sample can help reveal how planets formed and evolved, and might shed light on how life itself began. Because Bennu is around 4.5 billion years old, the sample offers something like a look back at the solar system in its early years, and Nasa has referred to it as a “time capsule”.

Bennu is also notable as Nasa’s “most dangerous asteroid”, according to a scale used to measure how much of a hazard a given object poses.

It is the first time that Nasa has brought back a piece of an asteroid, and the first time any asteroid sample has been returned to Earth since 2020. At around 250 grams, it is also the largest asteroid sample ever gathered.

Nasa sent a team on board helicopters to recover the sample canister, retrieving it quickly so that it would not become contaminated by the environment. Because the sample was collected directly from the asteroid, it should carry no trace of material from Earth, unlike the meteorites that fall to Earth.

The sample will be distributed among 200 people at 38 institutions across the world, including some in the UK.

The Osiris-Rex mission left Earth in September 2016 and arrived at the asteroid in December 2018. It gathered its sample in October 2020, then left the asteroid in May 2021. Since then, the spacecraft has been carrying the sample back to Earth from the other side of the solar system. On its approach, the spacecraft released the sample capsule to land on Earth, while Osiris-Rex itself will carry on to study another asteroid, Apophis, which it is due to reach in 2029.
Apophis is also notable for its danger: at times, it has challenged Bennu at the top of the league table of most dangerous objects, though recent research has suggested that Apophis poses less of a threat.

Ashley King, UKRI future leaders fellow at the Natural History Museum, said: “Osiris-Rex spent over two years studying asteroid Bennu, finding evidence for organics and minerals chemically altered by water.

“These are crucial ingredients for understanding the formation of planets like Earth, so we’re delighted to be among the first researchers to study samples returned from Bennu.

“We think the Bennu samples might be similar in composition to the recent Winchcombe meteorite fall, but largely uncontaminated by the terrestrial environment and even more pristine.”

Dr Sarah Crowther, research fellow in the Department of Earth and Environmental Sciences at the University of Manchester, said: “It is a real honour to be selected to be part of the Osiris-Rex sample analysis team, working with some of the best scientists around the world.

“We’re excited to receive samples in the coming weeks and months, and to begin analysing them and see what secrets asteroid Bennu holds.

“A lot of our research focuses on meteorites, and we can learn a lot about the history of the solar system from them.

“Meteorites get hot coming through Earth’s atmosphere and can sit on Earth for many years before they are found, so the local environment and weather can alter or even erase important information about their composition and history.
“Sample return missions like Osiris-Rex are vitally important because the returned samples are pristine, we know exactly w…

2023-09-24 23:26

UK Tightens Online Safety Bill Again as It Nears Final Approval

The UK made last-minute amendments toughening up its sweeping, long-awaited Online Safety Bill following scrutiny in Parliament’s upper

2023-06-30 09:00

Canva Celebrates 10 Years of Empowering the World to Design

SYDNEY--(BUSINESS WIRE)--Aug 28, 2023--

2023-08-28 22:29

Novogratz’s Galaxy Digital Turns Profitable on Crypto Markets Rebound

Michael Novogratz, the founder of crypto financial services firm Galaxy Digital Holdings Ltd., said his company is moving

2023-05-09 22:57

NBA 2K24 Mamba Mentality Guide: How to Complete

To complete the Mamba Mentality Quest in NBA 2K24, players must unlock and complete the Decelerator, Minimizer, and Second Chance Quests to earn Mamba Mentality.

2023-09-09 02:20

Africa’s Biggest Mobile Firm Plans New $320 Million Fiber Cable

MTN Group Ltd., Africa’s biggest mobile-phone operator, plans to build a $320 million inland fiber cable to connect

2023-05-16 00:26

Two US lawmakers raise security concerns about Chinese cellular modules

By David Shepardson WASHINGTON (Reuters) -Two U.S. lawmakers on Tuesday asked the Federal Communications Commission (FCC) to address questions about

2023-08-09 02:28

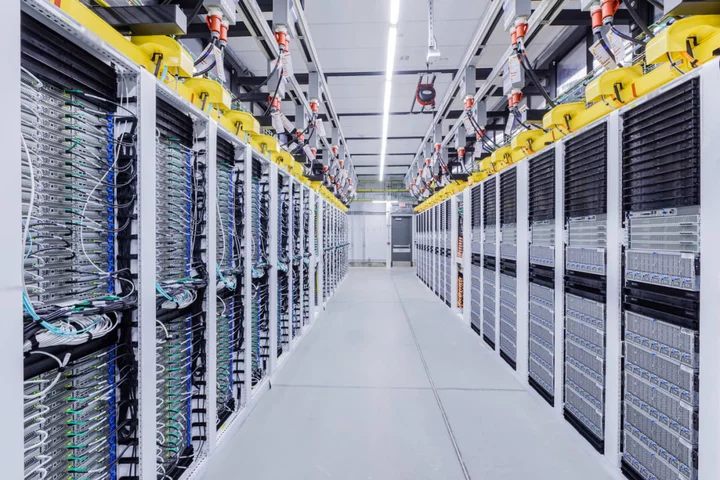

US Considers Limits on Cloud Computing For China

The US is considering restrictions on China’s access to computing over the Internet, or the cloud, as part

2023-07-06 06:54

WeVideo Appoints Kevin Knight as Chief Executive Officer

MOUNTAIN VIEW, Calif.--(BUSINESS WIRE)--Aug 10, 2023--

2023-08-10 23:22

Core Scientific Announces May 2023 Production and Operations Updates

AUSTIN, Texas--(BUSINESS WIRE)--Jun 5, 2023--

2023-06-05 21:26

Drone Startup Shield AI Valued at $2.5 Billion in New Funding Round

Shield AI, a startup that makes autonomous drone technology for military applications, is raising $150 million from investors,

2023-09-14 09:24

Stocks Could Jump Soon. This Tech Name Is a Good Way to Play a Bounce.

Focus on companies with powerful fundamentals. Microsoft offers exposure to two important themes: cloud computing and artificial intelligence.

2023-11-01 13:24

You Might Like...

New Warzone Season 4 Vondel Gulag Detailed

Did xQc want to keep things with Fran 'private'? Streamer annoyed after relationship was 'forced out'

Every Platform You Can Play Lethal Company

Apple Taps New Chief for Team Developing Watch’s Glucose Tracker

Another Three Rail Transit Lines in China Operate with Hytera Communication Systems

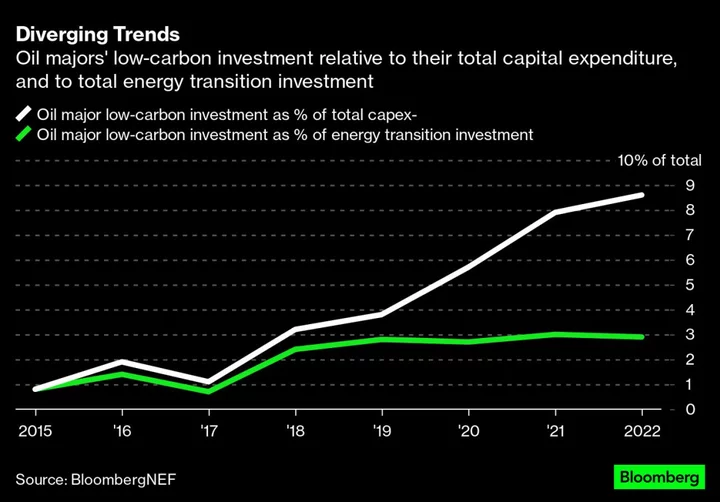

Big Oil’s Pullback From Clean Energy Matters Less Than You Might Think

Unity Says Goodbye To Company CEO After Backlash Over Game Install Fees

Skip subscription fees with this $30 Microsoft Office for Mac lifetime license