Italy Curbs China Influence Over Formula One Tiremaker Pirelli

Italy’s government stepped in to limit the influence of China’s Sinochem over Formula One supplier Pirelli SpA, citing

2023-06-17 03:50

Amazon Prime Day 2023 Has Arrived—and Here Are All the Best Deals on Headphones, Robot Vacuums, and More

These Amazon Prime Day 2023 deals can help you save big on top-rated products from Apple, iRobot, and other leading brands.

2023-07-11 23:17

Hong Kong protest anthem's online presence fades as govt seeks total ban

By Jessie Pang HONG KONG (Reuters) - Various versions of the pro-democracy protest anthem "Glory to Hong Kong" were unavailable on

2023-06-14 20:56

Sony Has Made a 'Binding Agreement' To Keep Call of Duty on PlayStation Following Activision Blizzard Acquisition

Call of Duty will remain available on PlayStation. Sony has made a “binding agreement” with

2023-07-17 02:23

The reason why people really did look older in the past

Back in the day, it’s said that people looked a lot older earlier in life than they do now. As it turns out, there are a few reasons why. A video essay from Vsauce, "Did People Used To Look Older?", posits a few explanations for why people in old footage and photographs seem to look older at a younger age. For one, improvements in standards of living and advances in healthcare over the years are an obvious factor. There’s also subconscious bias surrounding fashions from years gone by and their connection with older generations. However, a study from 2018 also explored how biological ageing has changed in a short space of time. It found that human beings are actually biologically “younger” now than ever when it comes to changes in things like blood pressure, so there’s an actual physical difference between the generations that explains why people looked older sooner back in the day. The study explained that this is down to factors such as a fall in smoking, reading: "Over the past 20 years, the biological age of the U.S. population seems to have decreased for males and females across the age range. "However, the degree of change has not been the same for men and women or by age. Our results showed that young males experienced greater improvements than young females. This finding may explain why early adult mortality has decreased more for males than females, contributing to a narrowing of the gender mortality gap. Additionally, improvements were also larger for older adults than they were for younger adults."

2023-07-16 19:57

Biggest-ever simulation of the universe could finally explain how we got here

It’s one of the biggest questions humans have asked themselves since the dawn of time, but we might be closer than ever to understanding how the universe developed the way it did and how we all came to be here. Computer simulations are happening all the time in the modern world, but a new study is attempting to simulate the entire universe in an effort to understand conditions in the far reaches of the past. The Full-hydro Large-scale structure simulations with All-sky Mapping for the Interpretation of Next Generation Observations (FLAMINGO for short) are being run out of the UK. The simulations are taking place at the DiRAC facility, with the ultimate aim of tracking how everything in the universe evolved to its present state. The sheer scale of it is almost impossible to grasp: the biggest of the simulations features a staggering 300 billion particles, each with the mass of a small galaxy. One of the most significant parts of the research comes in the third and final paper, which focuses on a factor known as the sigma 8 tension. This tension is based on calculations of the cosmic microwave background, which is the microwave radiation that came just after the Big Bang. Out of their research, the experts involved have learned that normal matter and neutrinos are both required when it comes to predicting things accurately through the simulations. “Although the dark matter dominates gravity, the contribution of ordinary matter can no longer be neglected, since that contribution could be similar to the deviations between the models and the observations,” research leader and astronomer Joop Schaye of Leiden University said. Simulations that include normal matter as well as dark matter are far more complex, given how complicated dark matter’s interactions with the universe are. Despite this, scientists have already begun to analyse the very formations of the universe across dark matter, normal matter and neutrinos.
“The effect of galactic winds was calibrated using machine learning, by comparing the predictions of lots of different simulations of relatively small volumes with the observed masses of galaxies and the distribution of gas in clusters of galaxies,” said astronomer Roi Kugel of Leiden University. The research for the three papers, published in the Monthly Notices of the Royal Astronomical Society, was undertaken partly thanks to a new code, as astronomer Matthieu Schaller of Leiden University explains. “To make this simulation possible, we developed a new code, SWIFT, which efficiently distributes the computational work over 30 thousand CPUs.”

2023-11-16 23:53

Early adopters in Mexico lend their eyes to global biometric project

By Anna Portella MEXICO CITY Eager early adopters recently descended upon a Mexico City cafe where their eyes

2023-08-08 21:45

JFrog Software Supply Chain Platform Delivers 393% ROI According to Total Economic Impact Study

SUNNYVALE, Calif.--(BUSINESS WIRE)--May 24, 2023--

2023-05-24 21:16

WhatsApp update stops people having to come up with good names for groups

WhatsApp will finally let people create group chats without having to come up with a clever name for them – or any name at all. Users will instead be able to just make a group and then have that group name itself after the people inside of it. WhatsApp suggested that the tool will be useful when “you need to create a group in a hurry, or you don’t have a group topic in mind”. It will be available for group chats with up to six people in them. The group names will be changed dynamically, depending on who is in the group. The group name will display differently for each user in it, depending on how they have people saved in their phone. If someone is added to a group with people who don’t have that person saved, then their phone number will show instead. Mark Zuckerberg announced the feature on Facebook. “Making it simpler to start WhatsApp groups by naming them based on who’s in the chat when you don’t feel like coming up with another name,” he wrote, sharing a picture of how the new groups will look. The feature is rolling out “globally over the next few days”, Meta said. It is one of a number of small tweaks that have been added to WhatsApp in recent weeks. Most recently, it fixed a major frustration that meant that pictures would be shrunk when they were sent within a group. The company is also quietly working on other features, including the addition of generative AI to create new stickers just by describing them.

2023-08-23 22:26

15 Obscure Words That Are Pure Fire

The world is heating up, and things are often on fire—literally. As we do what we can to squelch the flames, check out some old and obscure words people of the past used when they wanted to talk about all things fire.

2023-08-08 20:21

Yelp sues Texas to defend its labeling of crisis pregnancy centers

Yelp is suing Texas to ensure it can continue to tell users that crisis pregnancy centers listed on its site do not provide abortions or abortion referrals, opening a new front in the fight between states and the tech industry over abortion restrictions.

2023-09-28 21:59

Caltech reaches 'potential settlement' in Apple, Broadcom patent case

By Blake Brittain The California Institute of Technology has reached a "potential settlement" in a high-stakes patent infringement

2023-08-11 07:52

You Might Like...

Musk Says Tesla to Spend Over $1 Billion on Dojo Supercomputer

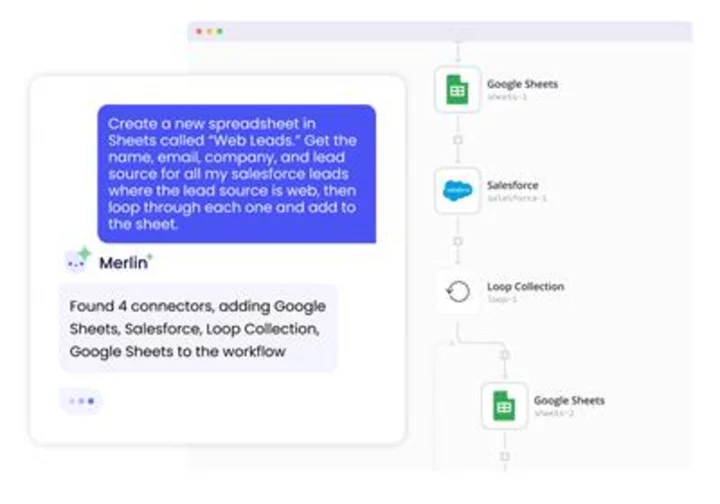

Tray.io Unveils Merlin AI to Instantly Transform Large Language Model Outputs Into Complete Business Processes

Twitter Resumes Paying Google Cloud, Patching Up Relationship

The Grid Must Grow Quickly to Achieve California’s Net-Zero Goal by 2045

Sasol Slumps as It Flags Profit Hit From S. Africa Snarl-Ups

Mobileye appoints insider Rojansky as CFO

Hyundai, Kia to adopt Tesla EV-charging standard from 2024 in US

Pokimane fires back at claims of Kick's domination amidst xQc's game-changing deal: 'Kick makes Twitch money'