Former LG and GM Executive Denise Gray Joins Liminal as Strategic Advisor

EMERYVILLE, Calif.--(BUSINESS WIRE)--Jul 10, 2023--

2023-07-10 22:20

Call of Duty: Modern Warfare III comes to life at star-studded launch event

Call of Duty: Modern Warfare III is out now on all platforms.

2023-11-10 21:49

Cadence Unveils Joules RTL Design Studio, Delivering Breakthrough Gains in RTL Productivity and Quality of Results

SAN JOSE, Calif.--(BUSINESS WIRE)--Jul 13, 2023--

2023-07-14 08:54

Amazon corporate workers plan walkout next week over return-to-office policies

Some Amazon corporate workers have announced plans to walk off the job next week over frustrations with the company's return-to-work policies, among other issues, in a sign of heightened tensions inside the e-commerce giant after multiple rounds of layoffs.

2023-05-24 05:25

Naruto x Boruto Ultimate Ninja Storm Connections: Everything We Know so Far, Is There a Release Date?

There is no set release date for the Naruto x Boruto Ultimate Ninja Storm Connections game, but it is definitely going to be released sometime this year.

2023-05-23 23:29

Sega Pulls Back From Blockchain Gaming as Crypto Winter Persists

Sega Corp., the gaming studio once regarded among the staunchest advocates of blockchain gaming, is pulling back from

2023-07-07 06:17

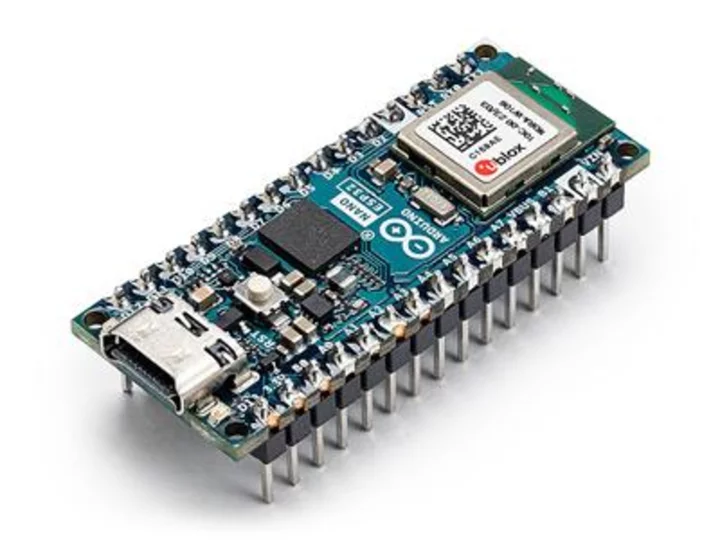

Arduino Introduces the Nano ESP32, Bringing the Popular IoT Microcontroller into the Arduino Ecosystem

AUSTIN, Texas & CHICAGO--(BUSINESS WIRE)--Jul 17, 2023--

2023-07-17 20:21

Mysterious yellow glass found in Libyan desert 'caused by meteorite', say scientists

A meteorite which smashed into Earth 29 million years ago may be behind a strange yellow glass found in a certain part of the desert in southeastern Libya and southwestern Egypt, according to researchers. The Great Sand Sea Desert stretches over about 72,000 square kilometres across the two countries, and is the only place where the mysterious yellow material is found on Earth. Researchers first described it in a 1933 scientific paper, calling it Libyan desert glass. Mineral collectors have long valued it for its beauty and mysterious qualities – and it was even found in a pendant in Egyptian pharaoh Tutankhamun’s tomb. The origin of the glass has been a mystery for centuries, but researchers writing in the journal De Gruyter used new advanced microscopy technology to get answers. Elizaveta Kovaleva, a lecturer at the University of the Western Cape, wrote that the glass was caused by “the impact of a meteorite on the Earth's surface”. Writing in The Conversation, she said: “Space collisions are a primary process in the solar system, as planets and their natural satellites accreted via the asteroids and planet embryos (also called planetesimals) colliding with each other. These impacts helped our planet to assemble, too.” She said: “We studied the samples with a state-of-the-art transmission electron microscopy technique, which allows us to see tiny particles of material – 20,000 times smaller than the thickness of a paper sheet. Using this super-high magnification technique, we found small minerals in this glass: different types of zirconium oxide (ZrO₂).” One of the types of this mineral found in the glass can only form at temperatures between 2,250 and 2,700 degrees Celsius. Toasty. Kovaleva said: “Such conditions can only be obtained in the Earth's crust by a meteorite impact or the explosion of an atomic bomb.” However, she wrote, there are just as many questions as there are answers.
The nearest known meteorite craters are too far away and too small to be the cause of that much glass all concentrated in one part of the world. “So, while we've solved part of the mystery, more questions remain. Where is the parental crater? How big is it – and where is it? Could it have been eroded, deformed or covered by sand?” Safe to say, the scientists will keep on looking until they have the answers.

2023-12-01 02:17

Toyota and LG Energy sign battery supply agreement to power EVs

(Reuters) - Toyota Motor and LG Energy Solution signed a supply agreement for lithium-ion battery modules for use in the Japanese

2023-10-05 03:17

FIS Named Top 200 Fintech Company for Digital Banking Solutions in Inaugural CNBC Ranking

JACKSONVILLE, Fla.--(BUSINESS WIRE)--Aug 11, 2023--

2023-08-11 20:22

Cathie Wood Seeks Tech Gains in Meta, TSMC After Dumping Nvidia

Cathie Wood’s funds have reentered Meta Platforms Inc. and Taiwan Semiconductor Manufacturing Co. after nearly a year and

2023-06-13 18:18

How to watch Australian Netflix for free

SAVE 49%: ExpressVPN is the best service for unblocking streaming sites. A one-year subscription to

2023-07-27 12:26

You Might Like...

Charles Martinet, the voice of Mario, bids farewell to role after 30 years

Australia Agrees on Pared Deal for Multi-Billion Undersea Cable

Indian Refiner Seeks First Ethanol From Bamboo as Demand Swells

Japan auto show returns, as industry faces EV turning point

Experts unravel mystery of the Pokémon episode that hospitalised hundreds of kids

Apple could be dropping leather from iPhone cases and Watch bands, reports claim

Biden to Announce $60 Million Enphase Energy Investment

Amber Heard's Spanish Escapade: Embracing Madrid's charm while keeping mum on the Johnny Depp saga