This mini docking station for Nintendo Switch is under $20

TL;DR: As of August 22, you can get the Mini Docking Station for Nintendo Switch

2023-08-22 17:25

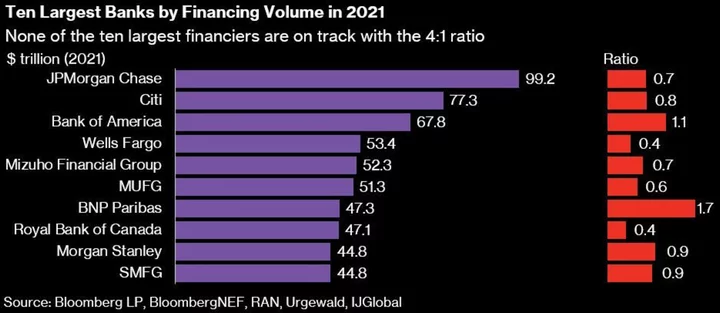

Barclays, Morgan Stanley Lead Banks Nearing CO2 Disclosure Deal

A group of banks led by Barclays Plc and Morgan Stanley is working on a compromise deal to

2023-05-17 02:27

Milwaukee bankruptcy avoidance plan up for approval in Wisconsin Legislature

A plan to prevent Milwaukee from going bankrupt is expected to win bipartisan approval in the Wisconsin Legislature

2023-06-14 12:15

'Below Deck Sailing Yacht' Season 4: Who is Suginia Jones? Primary charter guest gets 'highest level of service'

Primary charter guest Suginia Jones and her friends will be treated to the crew's service on Bravo's 'Below Deck Sailing Yacht'

2023-06-13 10:26

Chinese livestreamers set their sights on TikTok sales to shoppers in the US and Europe

Chinese livestreamers have set their sights on selling to TikTok shoppers in the U.S. and Europe

2023-07-19 12:15

Why did Keemstar accuse Pokimane of having a boyfriend? 'That’s so fake & pathetic!'

Keemstar accused Pokimane of pretending to be single so that 'sad lonely guys' will donate money to her Twitch stream

2023-05-30 15:54

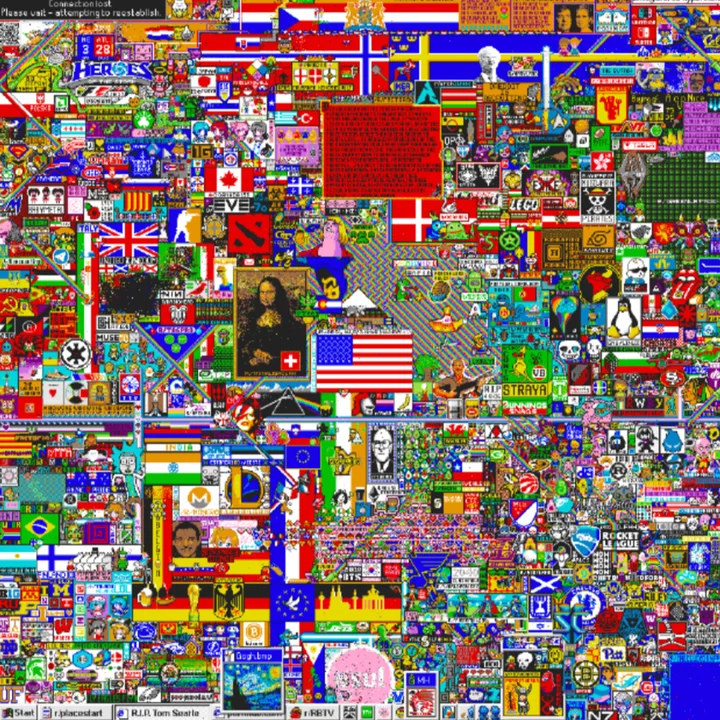

Reddit Place experiment immediately covered in grotesque messages

Reddit users have hijacked a collaborative experiment run by the company within minutes of its launch. The third edition of Reddit Place – a 1-million-pixel online canvas that allows any user to choose the colour of an individual pixel – launched on Thursday amid ongoing protests against the platform’s management.

Communities, or sub-Reddits, quickly organised to post explicit messages aimed at Reddit’s chief executive on various sections of the canvas. In the centre of r/Place, a giant sign appeared within minutes reading “Fuck Spez”, referring to Reddit CEO Steve ‘Spez’ Huffman. Other sections featured graffiti scrawled with the same message, while another displayed the text “Never forget what was stolen from us”, a reference to the third-party apps that shut down in the wake of API changes to the site.

Reddit was forced to push back the experiment several times in an effort to avoid coinciding with the worst of the protests, which at one stage saw thousands of high-profile sub-Reddits go dark. Reddit acknowledged the timing of the latest social experiment, adding the tagline: “Right place, wrong time.” The Independent has reached out to Reddit for further comment on the latest protests.

Reddit Place is set to continue for the next four days, allowing users to contribute to its evolving creation. Previous editions featured flags, cartoon characters, popular memes and even works of art. One nihilistic group, The Black Void, took over vast swathes of the 2017 Reddit Place canvas with black pixels.

The original concept of Reddit Place was intended to “enable humans to communicate and collaborate in ways they have never been able to before”, according to creator Josh Wardle, who went on to create the popular word game Wordle. “My hope is that the success and collaborative nature of projects like Place will encourage other internet companies to take some more risks when exploring ways that their users can interact,” he said at the time.

2023-07-21 01:16

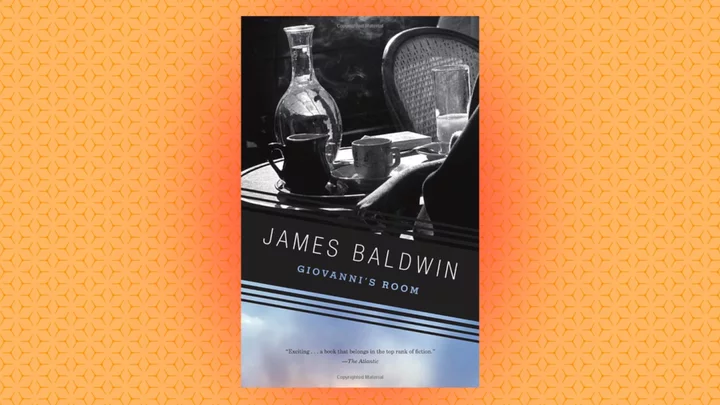

10 Facts About James Baldwin’s ‘Giovanni’s Room’

James Baldwin's novel 'Giovanni’s Room' was rejected by editors and publishers before it was eventually released in 1956.

2023-06-26 20:21

U.S. Supreme Court rejects affirmative action in university admissions

By Andrew Chung and John Kruzel The U.S. Supreme Court on Thursday struck down race-conscious admissions programs at

2023-06-30 00:20

Exclusive-ICBC injected capital into U.S. unit after hack - sources

NEW YORK - Industrial and Commercial Bank of China (ICBC) injected significant capital into its U.S. unit to help

2023-11-11 04:48

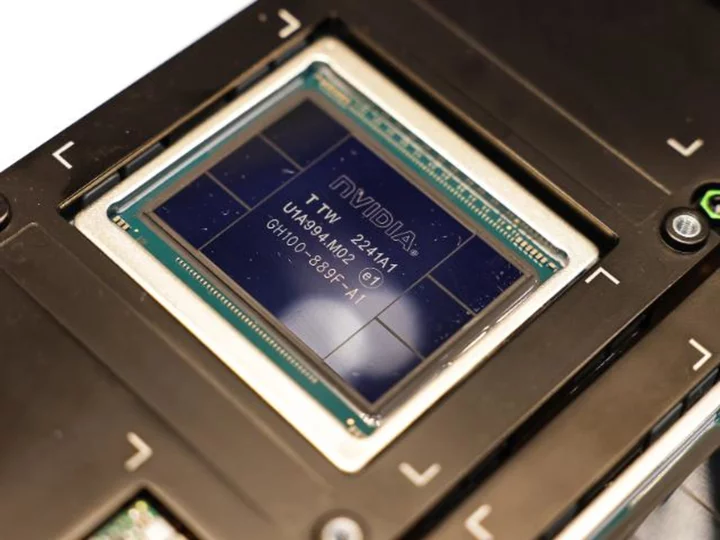

Asus to Sell Nvidia AI Servers You Can Install in Your Office

Taiwan’s Asustek Computer Inc. plans to introduce one of the first services that lets companies tap into the

2023-05-30 08:46

Krach Institute Bolsters Tech Diplomacy Ties with Taiwan

WEST LAFAYETTE, Ind.--(BUSINESS WIRE)--Jun 20, 2023--

2023-06-20 19:20

You Might Like...

Every Gram Counts: SCHOTT Launches Lightweight Microelectronic Packages for Aerospace

A Maker of Plant-Based Spreads Is Catching Up on Climate Goals

ChatGPT’s Riskiness Splits Biden Administration on EU’s AI Rules

Allison Transmission and Biffa Honored With Best Fleet Management Award for Partnership to Reduce Fuel Consumption and Emissions

Wall Street’s AI Gambit Fuels Call for US Congressional Scrutiny

The big bottleneck for AI: a shortage of powerful chips

One Scoop of the World’s Most Expensive Ice Cream Will Set You Back Nearly $7000

Midtown Manhattan Is Literally New York’s Hottest Neighborhood