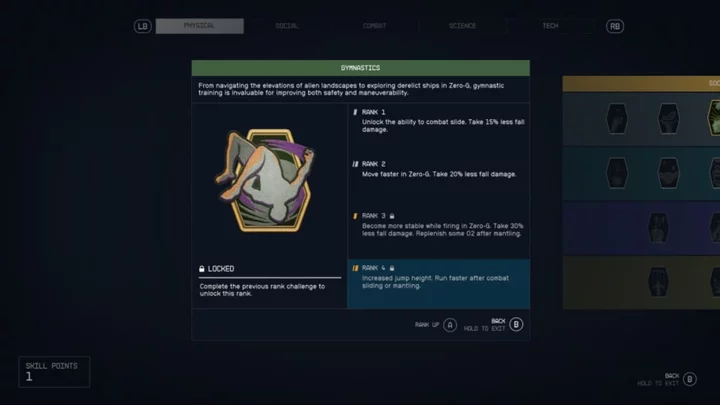

How to Unlock and Use Combat Slide in Starfield

Starfield players can unlock the Combat Slide mechanic by leveling up the Gymnastics perk in the Physical skill tree.

2023-09-01 04:23

Germany in intensive talks with Intel on chip plant - econ ministry

BERLIN Germany is in intensive talks with Intel on plans to set up a new chip-making complex on

2023-06-16 18:50

NFL Network and NFL RedZone will be offered direct to consumer on 'NFL+' service

The NFL is making additional moves to reach more fans with direct-to-consumer offerings

2023-08-11 03:49

Tinder Offers $500-a-Month Subscription to Its Most Active Users

Tinder has rolled out an ultra-premium subscription tier to its dating app users, charging $499 per month to

2023-09-23 02:58

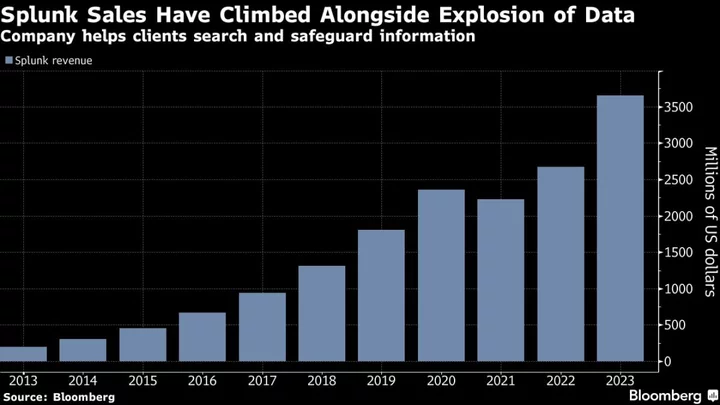

Cisco is Buying Splunk for $28 Billion. Here's What Splunk Does

If the deluge of data in the modern world is increasingly overwhelming, cybersecurity company Splunk claims to have

2023-09-22 03:15

How Much Do All the Streaming Services Cost? Too Much.

Pricing the streaming options is a headache.

2023-08-11 01:58

iPhone 15 may see major design changes and a slight price increase this year

It's hard to believe, but the September iPhone event is only weeks away. With that,

2023-07-31 01:20

The Future of Data: Options Shines as Industry Leader in Global Delivery and Seamless 100Gb OPRA Data Feed Migrations

LONDON & NEW YORK & HONG KONG--(BUSINESS WIRE)--Sep 7, 2023--

2023-09-07 19:00

Elaborate 'Entrance to Hell' discovered underneath a church

Just when you thought 2023 was already going pretty badly, an “entrance to the underworld” has been found under a Mexican church - so, that can't be a good omen.

It is, however, a very interesting find: the ancient structure, once believed to be an opening to hell, was discovered at the site of Mitla near Oaxaca. It consists of an underground labyrinth used frequently by the Zapotec culture, who lived in the area for around 2200 years until the Spanish conquests in 1521. While the structure has much earlier origins, the Zapotecs expanded the site and used it extensively until they left the area.

Around the late 16th century CE, after the Spanish had welcomed themselves to the Americas, a Catholic church and other structures were plonked on top of the site. Traditionally, the ancient Zapotecs believed the ruin to be a doorway to the world of the dead, and it’s thought that the entrance to the passages could be through the main altar of the church.

Teams from the Mexican National Institute of History and Anthropology (INAH), the National Autonomous University of Mexico (UNAM), the Association for Archaeological Research and Exploration and the ARX Project all collaborated on the findings. They used geophysical scanning to uncover the complex of tunnels. However, perhaps the most significant discovery was an area around 16 to 26 feet below the ground that could be a large chamber. It’s an exciting development, and as only the first round of surveys has taken place, this is just the beginning.

The ARX Project, one of the groups behind the discovery, released an announcement saying: “In 1674, the Dominican father Francisco de Burgoa described the exploration of the ruins of Mitla and their subterranean chambers by a group of Spanish missionaries. Burgoa’s account speaks of a vast subterranean temple consisting of four interconnected chambers, containing the tombs of the high priests and the kings of Teozapotlán.”

“From the last subterranean chamber, a stone door led into a deep cavern extending thirty leagues below ground. This cavern was intersected by other passages like streets, its roof supported by pillars. According to Burgoa, the missionaries had all entrances to this underground labyrinth sealed, leaving only the palaces standing above ground,” it continued.

2023-07-06 00:00

'Super Mario Bros. Wonder' is about being nice to people on the internet

Super Mario Bros. Wonder isn't the first Mario game with online play, but it might

2023-09-01 02:24

Time to Buy? PC Prices Fall Amid Decreasing Demand

After experiencing a pandemic sales boom, the PC market is facing tough times due to

2023-06-15 00:54

Best AT&T Labor Day Phone Deals: Apple iPhone 14 Pro from $0 Per Month With Select Trade-in

Getting a little tired of your phone? Thinking about trading up for something a little

2023-09-01 22:19

You Might Like...

European Union commissioner blasts X over disinformation track record

Mario vs. Donkey Kong Release Date

Zoom CEO raises eyebrows by saying people need to go back to the office

Alienware x14 R2 Review

Indian glacial lake that flooded was poised to get early warning system

Upgrade your listening experience with Beats Studio3 headphones for $180 off

SiTime Transforms Precision Timing with New Epoch Platform

Wall Street’s Only Research Firm With ‘Sell’ on Nvidia Gives In