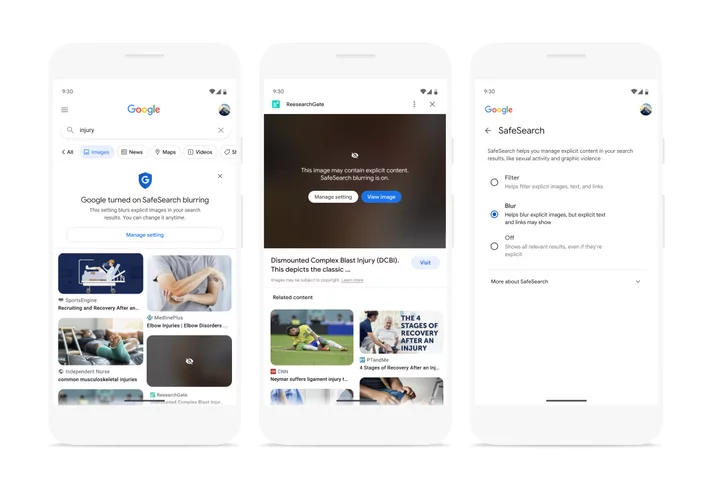

Google is officially releasing its new SafeSearch setting to all users this month. The feature can filter explicit images (including gore and violence, or graphic adult content) that appear in Search results, and Google Search will now automatically flag and blur such content by default for users globally.

The feature started as a parental control option designed to keep users monitored under family accounts from inadvertently encountering explicit imagery on Search. In 2021, Google expanded the parental controls in Family Link (Google's family safety hub) to automatically turn on SafeSearch for users under 18. The setting filtered explicit content out of Search results and bolstered Google's broader efforts to make children's online experience safer. In a February update, Google announced a forthcoming blurring option that would apply to all searches, even for accounts without SafeSearch turned on.

Now, the SafeSearch blur option will be a standard part of the Google Images experience. The setting can still be adjusted or turned off in account settings at any time, unless a guardian or school network administrator has locked it, Google explains. A parent or caregiver can manage child accounts in the Family Link app, and administrators can manage SafeSearch settings for users under the age of 18 who are signed in to a Google Workspace for Education account or associated with a K-12 institution.

In addition to the new SafeSearch function, Google is making parental controls easier to access. When users type a relevant search phrase, such as "google parental controls" or "google family link", an information box appears explaining how to access and manage the controls.

Credit: Google