TOKYO--(BUSINESS WIRE)--Aug 16, 2023--

NTT Corporation (NTT), in collaboration with the Research Institute of Electrical Communication, Tohoku University, and CASA (Cyber Security in the Age of Large-Scale Adversaries) at Ruhr University Bochum, has developed a dedicated cache randomization function that eliminates the vulnerability caused by cache-induced timing differences, which arise when data is fetched and updated between the CPU and memory. This research contributes to the realization of highly secure CPUs that prevent information leakage due to cache attacks.

This press release features multimedia. View the full release here: https://www.businesswire.com/news/home/20230816297198/en/

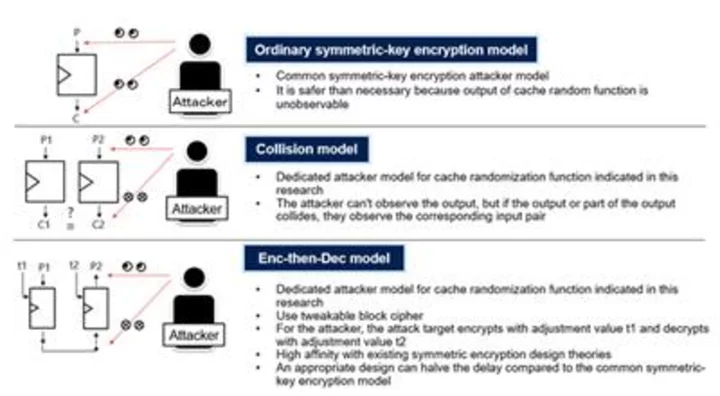

Figure 1: Conventional symmetric-key encryption model and the model specialized for cache randomization functions (Photo: Business Wire)

NTT designed and proposed the Secure Cache Randomization Function (SCARF) for randomizing the cache index [1], and formulated what type of function is suitable for this purpose by providing design guidelines for cache randomization functions. The paper has been accepted for presentation at USENIX Security '23※, held in Anaheim from August 9 to August 11, 2023.

Key Points:

- Modeling of attackers who perform cache attacks

- Design of a concrete function, SCARF, dedicated to cache index randomization

- An efficient and secure design theory against the modeled attackers, realized using a tweakable block cipher [2]

Background of Research:

Current CPUs use cache memory to reduce the delay of transferring data between the CPU and memory: recently used data is placed near the CPU so that subsequent references are faster. Data that has been referenced once can be accessed quickly the next time, but this timing difference is also observable by attackers. Attacks that exploit this information are called cache attacks. They pose a real vulnerability, and countermeasures are needed. In particular, contention-based cache attacks, which result from contention for cache entries between the target program and the attack program, are recognized as a real threat because they impose few prerequisites on attackers.

Randomization of the cache index is a promising countermeasure against contention-based cache attacks. The idea is that an attacker who cannot determine which cache index a target's address maps to cannot exploit the cache. However, it has not been known what level of implementation is necessary and sufficient to achieve such randomization.
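The difference between conventional and randomized cache indexing can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the cache geometry (`LINE_BITS`, `INDEX_BITS`), the helper names, and above all the use of keyed BLAKE2b as a stand-in randomization function. It is not SCARF, and a hash of this kind would be far too slow for a real cache.

```python
import hashlib

LINE_BITS = 6                 # assumed 64-byte cache lines
INDEX_BITS = 10               # assumed 1024 cache sets
NUM_SETS = 1 << INDEX_BITS


def conventional_index(addr: int) -> int:
    """Cache index taken directly from a slice of the physical address."""
    return (addr >> LINE_BITS) & (NUM_SETS - 1)


def randomized_index(addr: int, key: bytes) -> int:
    """Cache index derived from a keyed function of the line address.

    Keyed BLAKE2b is only a placeholder for a dedicated low-latency function.
    """
    line_addr = addr >> LINE_BITS
    digest = hashlib.blake2b(
        line_addr.to_bytes(8, "little"), key=key, digest_size=8
    ).digest()
    return int.from_bytes(digest, "little") & (NUM_SETS - 1)


def congruent_addresses(target: int, count: int) -> list[int]:
    """Addresses that share the target's index under conventional indexing."""
    stride = NUM_SETS << LINE_BITS
    return [target + i * stride for i in range(1, count + 1)]


if __name__ == "__main__":
    key = b"hypothetical-secret-key"
    target = 0x12345640
    for addr in congruent_addresses(target, 4):
        print(hex(addr), conventional_index(addr), randomized_index(addr, key))
```

With conventional indexing, the attacker can compute conflicting addresses with simple arithmetic. With a keyed index, the same addresses map to sets that cannot be predicted without the key.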

For example, encryption with block ciphers [4], a type of symmetric-key cipher [3], is considered a candidate for the randomization function. However, block ciphers were originally designed to ensure confidentiality. Specifically, block ciphers aim to be secure in an environment where an attacker can observe and choose all inputs and outputs, which makes them over-engineered for a cache randomization function, whose output cannot be observed.

Research Results:

In this research, NTT first investigated what an attacker can actually do against a cache randomization function, and then designed attack models for dedicated cache randomization functions that appropriately reflect the attacker's capabilities. Specifically, NTT introduced two models: a collision model, in which the corresponding input pair becomes observable when part of the output collides, and an Enc-then-Dec model, which uses a tweakable block cipher instead of a block cipher and encrypts with the tweak (adjustment value) t1 and then decrypts with the tweak t2. The latter model in particular has a high affinity with existing design theories for symmetric-key ciphers, and with proper design it can reduce the latency by half compared to conventional methods (Figure 1).
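The relationship between the two models can be sketched in Python with a toy tweakable block cipher. The cipher below is a hypothetical 10-bit Feistel network built on keyed BLAKE2b, chosen only because it is easy to invert. It is not SCARF and offers no security. The names `encrypt`, `decrypt`, and `enc_then_dec` are assumptions for illustration.

```python
import hashlib

HALF_BITS = 5                         # toy 10-bit block: two 5-bit halves
HALF_MASK = (1 << HALF_BITS) - 1
ROUNDS = 8


def _round_fn(key: bytes, tweak: int, rnd: int, half: int) -> int:
    """Toy round function depending on key, tweak, round number, and input half."""
    data = tweak.to_bytes(8, "little") + bytes([rnd, half])
    return hashlib.blake2b(data, key=key, digest_size=1).digest()[0] & HALF_MASK


def encrypt(key: bytes, tweak: int, block: int) -> int:
    """Encrypt a 10-bit block under the given tweak (Feistel network)."""
    left, right = block >> HALF_BITS, block & HALF_MASK
    for rnd in range(ROUNDS):
        left, right = right, left ^ _round_fn(key, tweak, rnd, right)
    return (left << HALF_BITS) | right


def decrypt(key: bytes, tweak: int, block: int) -> int:
    """Invert encrypt() for the same key and tweak."""
    left, right = block >> HALF_BITS, block & HALF_MASK
    for rnd in reversed(range(ROUNDS)):
        left, right = right ^ _round_fn(key, tweak, rnd, left), left
    return (left << HALF_BITS) | right


def enc_then_dec(key: bytes, t1: int, t2: int, block: int) -> int:
    """Encrypt under tweak t1, then decrypt under tweak t2."""
    return decrypt(key, t2, encrypt(key, t1, block))


if __name__ == "__main__":
    key, t1, t2, x1 = b"hypothetical-key", 0x1111, 0x2222, 0x155
    x2 = enc_then_dec(key, t1, t2, x1)
    # The pair (x1, x2) collides: both inputs map to the same output.
    print(encrypt(key, t1, x1) == encrypt(key, t2, x2))
```

In this sketch, two inputs x1 and x2 collide under tweaks t1 and t2 exactly when x2 equals the Enc-then-Dec output for x1, which is why observing collisions amounts to observing input/output pairs of the composed function.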

In this research, NTT proposed SCARF, a specific cache randomization function designed using the Enc-then-Dec model. The design of SCARF takes advantage of NTT's long-standing expertise in symmetric-key encryption design. While existing low-latency block ciphers require a latency of 560 to 630 ps in 15 nm technology, SCARF achieves a latency of 305.76 ps in the same environment, about half. This halving is achieved by design techniques that make the most of the Enc-then-Dec model.

Future Development:

The cache randomization function SCARF is designed to fit many current cache architectures. On the other hand, some architectures are not compatible with SCARF. Generalizing the SCARF structure is expected to accommodate a wider range of architectures. NTT will continue to develop purpose-built encryption technology that, as in this research, significantly outperforms general-purpose encryption methods in limited-use environments.

Paper Information:

Federico Canale, Tim Güneysu, Gregor Leander, Jan Philipp Thoma, Yosuke Todo, Rei Ueno, “SCARF – A Low-Latency Block Cipher for Secure Cache-Randomization,” Usenix Security 2023.

Reference:

Contention-based cache attack mechanism

Assume the attacker's goal is to learn whether the attack target used address A. The attacker selects addresses from their own physical address space [5] that conflict with the cache index used by address A, fills the cache entries at that index with data from their own address space, and waits for the target to execute. After the target executes, the attacker accesses the selected addresses again and measures the response delay. If the target used address A, the delay is significant, because the cache entry at the target index has been replaced by the data of address A. If the target did not use address A, the attacker's accesses complete with low delay. In this way, the attacker can learn whether the target used address A. (Figure 2)
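The mechanism described above can be simulated with a small Python sketch. The cache model is an assumption for illustration only: a set-associative cache with LRU replacement, conventional (non-randomized) indexing, and hypothetical geometry and addresses. Cache misses stand in for the long delays that a real attacker would measure with a timer.

```python
from collections import OrderedDict

LINE_BITS = 6      # assumed 64-byte cache lines
NUM_SETS = 64      # assumed number of cache sets
WAYS = 4           # assumed associativity


class ToyCache:
    """Set-associative cache with LRU replacement and conventional indexing."""

    def __init__(self) -> None:
        self.sets = [OrderedDict() for _ in range(NUM_SETS)]

    def access(self, addr: int) -> bool:
        """Access an address; return True on a hit and False on a miss."""
        line = addr >> LINE_BITS
        lines = self.sets[line % NUM_SETS]
        if line in lines:
            lines.move_to_end(line)        # mark as most recently used
            return True
        if len(lines) >= WAYS:
            lines.popitem(last=False)      # evict the least recently used line
        lines[line] = True
        return False


def prime_probe(victim_uses_target: bool) -> int:
    """Run one attack round and return the number of slow (missed) probes."""
    cache = ToyCache()
    target = 0x4000_0040                   # hypothetical victim address A
    stride = NUM_SETS << LINE_BITS
    # Attacker addresses that map to the same cache set as the target.
    eviction_set = [0x1000_0040 + i * stride for i in range(WAYS)]

    for addr in eviction_set:              # step 1: fill the set
        cache.access(addr)
    if victim_uses_target:                 # step 2: the victim runs
        cache.access(target)
    # step 3: re-access and count misses (a proxy for measured delay)
    return sum(not cache.access(addr) for addr in eviction_set)


if __name__ == "__main__":
    print("victim idle:   ", prime_probe(False), "slow probes")
    print("victim used A: ", prime_probe(True), "slow probes")
```

When the victim does not touch address A, every probe hits. When it does, at least one probe misses, which is the signal the attacker looks for. A randomized cache index aims to prevent the attacker from constructing the eviction set in the first place.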

※Usenix Security 2023: One of the world's most notable international conferences on practical computer security and network security technologies.

URL: https://www.usenix.org/conference/usenixsecurity23

Glossary:

- [1] Cache index:

The address within the cache at which data from main memory is placed. Typically, a portion of the physical address is used as the cache index.

- [2] Tweakable block cipher:

An extension of block ciphers. In addition to the inputs and outputs of a block cipher, it takes an additional input, the tweak (adjustment value), which an attacker can also observe and choose. Each tweak value yields an independent block cipher. “Skinny” is one of the tweakable block ciphers proposed and standardized by NTT.

- [3] Symmetric-key (common-key) encryption:

An encryption method that uses a common key for encryption and decryption. It is now widely used in everything from data communication to storage.

- [4] Block cipher:

A type of symmetric-key encryption that takes a secret key and a fixed-length message as input and outputs a fixed-length ciphertext. The security of block ciphers is discussed on the assumption that attackers can observe and choose messages and ciphertexts. “Camellia” is one of the block ciphers proposed and standardized by NTT.

- [5] Physical address space:

In computer memory management, a physical address is an address that directly represents the physical location where data is stored. The physical address space is the entire range of memory addressable by physical addresses.

About NTT

NTT contributes to a sustainable society through the power of innovation. We are a leading global technology company providing services to consumers and business as a mobile operator, infrastructure, networks, applications, and consulting provider. Our offerings include digital business consulting, managed application services, workplace and cloud solutions, data center and edge computing, all supported by our deep global industry expertise. We are over $100B in revenue and 330,000 employees, with $3.6B in annual R&D investments. Our operations span across 80+ countries and regions, allowing us to serve clients in over 190 of them. We serve over 75% of Fortune Global 100 companies, thousands of other enterprise and government clients and millions of consumers.

View source version on businesswire.com: https://www.businesswire.com/news/home/20230816297198/en/

CONTACT: Media Contacts

NTT Service Innovation Laboratory Group

Public Relations, Planning Department

nttrd-pr@ml.ntt.com

Nick Gibiser

Account Director

Wireside Communications

For NTT

ngibiser@wireside.com

KEYWORD: JAPAN NORTH AMERICA ASIA PACIFIC

INDUSTRY KEYWORD: TECHNOLOGY MOBILE/WIRELESS SECURITY OTHER TECHNOLOGY TELECOMMUNICATIONS BLOCKCHAIN SOFTWARE NETWORKS DATA MANAGEMENT

SOURCE: NTT Corporation

Copyright Business Wire 2023.

PUB: 08/16/2023 08:07 AM/DISC: 08/16/2023 08:06 AM
