Apple will let you clone your voice on your iPhone

Ahead of Global Accessibility Awareness Day on 18 May, Apple has unveiled a host of new software features that will be coming to the iPhone, iPad and Mac later this year.

The suite of updates announced on Tuesday includes accessibility features to help those with cognitive, vision, hearing and mobility disabilities and, for the first time, new tools for those who have lost their ability to speak or are at risk of losing their voice.

In the future update, users with a speech disability or a recent ALS diagnosis will be able to use their Apple device to create a digital voice that sounds like them. The feature, called Personal Voice, can be trained in 15 minutes simply by reading a set of randomly generated text prompts, and uses on-device machine learning to ensure your data is kept private and secure.

The Motor Neurone Disease Association suggests that voice banking takes an average of two hours or longer with current tech, and can cost hundreds of pounds.

The Independent got a first look at the new feature in action, and the Personal Voice sounded remarkably similar to the user’s actual voice, albeit with a slightly robotic, synthesised tone.

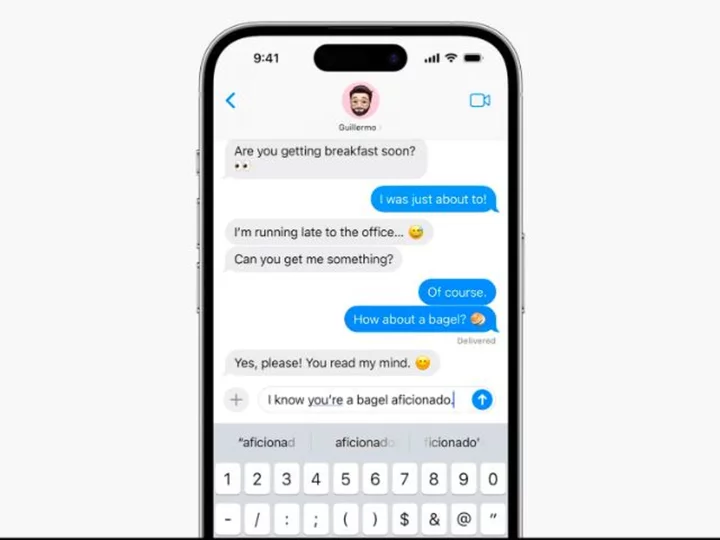

Personal Voice will be available for use with Apple’s new Live Speech feature on iOS, which will let users type what they want to say and have it spoken out loud during in-person conversations, as well as on phone and FaceTime calls. The feature is said to work with all accents and dialects.

As well as features for speech accessibility, Apple announced a cognitive disability setting for the iPhone and iPad called Assistive Access, which essentially lets you pare your device down to a few core apps of your choosing. Arranged in a grid or a list, it simplifies the user interface down to large, easily readable buttons, so you can make calls, use an emoji-only keyboard and access a fuss-free camera, without the clutter.

Companies such as Doro and Jitterbug currently have a stranglehold on the “simple phone”, offering devices for senior users that strip away the bulk, and deliver a simple user interface with large high-contrast buttons that make it easy to use a phone. With Apple’s new Assistive Access feature, those with cognitive disabilities will be able to take advantage of a mainstream iPhone device and its features without having to opt for an Android device or one designed for their specific need.

In addition, Apple previewed a new feature in the Magnifier app for low-vision users called Point and Speak, which makes use of the camera, the LiDAR Scanner and on-device machine learning to read aloud text that a user points their finger at. The company also announced Mac support for Made for iPhone hearing devices, phonetic suggestions for Voice Control users and the ability for Switch Control users to turn their switches into game controllers on the iPhone and iPad.

Apple has made it a tradition over the years to unveil new accessibility features ahead of WWDC in June, where it usually reveals the next iOS update, emphasising its prioritisation of accessibility within the iOS ecosystem.

While Apple didn’t state when exactly the new updates would be coming to its devices later this year, a rollout alongside iOS 17 seems likely, given previous announcements.
